Most modern applications are assembled from open-source components, with developers typically writing less than 15% of the code for their applications. As the demand for open-source software grows, so does the number of open-source components available. However, not all open-source components are created equally or maintained properly. As a result, we are seeing an increase in software supply chain attacks. According to the 8th annual software supply chain report, software supply chain attacks have grown at an average rate of 742% per year. There’s also been an increase in protestware, with developers intentionally sabotaging their own open-source components, such as the case of the Colors and Faker components, which resulted in a denial of service for the applications that depended on them. We’ve also seen developers themselves being the target of these attacks, where malicious programs are installed on their machines when they download open-source components such as Python libraries.

Below are five important steps that you can take to secure your organisation’s software supply chain.

The first step is to have a software bill of materials (SBOM). This is a list of all the open-source components, including their versions, that make up your applications. Having an SBOM allows you to quickly understand your organisation’s exposure when vulnerabilities are discovered. There should also be an owner for each application, so that it is easy to determine who’s responsible for maintaining the code.
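To make the idea concrete, here is a minimal sketch of how an SBOM answers the question "where are we exposed?". The application names, owners, component name and versions are all hypothetical, and the dictionary layout is a simplification; real SBOM formats such as CycloneDX or SPDX carry many more fields.

```python
# Hypothetical, simplified SBOM keyed by application (illustrative data only).
sbom = {
    "payments-api": {
        "owner": "team-payments",
        "components": [
            {"name": "example-logging-lib", "version": "2.14.1"},
            {"name": "example-json-lib", "version": "2.15.2"},
        ],
    },
    "customer-portal": {
        "owner": "team-web",
        "components": [
            {"name": "example-logging-lib", "version": "2.17.1"},
        ],
    },
}


def find_exposed(sbom, component, affected_versions):
    """Return (application, owner, version) for every app using an affected version."""
    exposed = []
    for app, entry in sbom.items():
        for comp in entry["components"]:
            if comp["name"] == component and comp["version"] in affected_versions:
                exposed.append((app, entry["owner"], comp["version"]))
    return exposed


# A new advisory names two affected versions of the (hypothetical) logging library.
hits = find_exposed(sbom, "example-logging-lib", {"2.14.1", "2.15.0"})
for app, owner, version in hits:
    print(f"{app} (owner: {owner}) uses affected version {version}")
```

Because each application entry also records an owner, the same lookup tells you who needs to act, not just what is affected.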

The next step is to perform due diligence on all open-source components that your organisation uses. We already do that for commercial software & suppliers, and the same needs to be done for open-source components. We need to make sure that the components we use are free from any known defects (vulnerabilities). There are Software Composition Analysis (SCA) tools that can scan your applications for vulnerabilities. As more effort is required to remediate vulnerabilities that are already in production, these SCA tools are often deployed in the build pipeline to ensure that there are no known vulnerabilities before the application is released. However, given the trend where developer machines are sometimes the target of software supply chain attacks, these scans should also be done before the components are downloaded onto the developer’s machine. Regular scanning of production applications is also required to detect any newly discovered vulnerabilities. Other due diligence that should be done includes only using components from reputable sources and staying clear of unpopular components or components that have a single developer. Popular components get more public scrutiny, with any vulnerabilities more likely to be detected, and using components developed by a single person represents a key person risk.
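As a rough illustration of an SCA check in the build pipeline, the sketch below blocks a release when a scan reports a finding at or above a chosen severity. The findings format, the component names and the `gate` function are assumptions made for this example; a real SCA tool has its own report format and policy engine.

```python
# Severity ranking used by this sketch (an assumption, not a tool's schema).
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings, fail_at="high"):
    """Return the findings that should block a release."""
    threshold = SEVERITY_ORDER[fail_at]
    return [f for f in findings if SEVERITY_ORDER[f["severity"]] >= threshold]


# Hypothetical scan output for one build.
findings = [
    {"component": "example-xml-parser", "version": "1.2.0", "severity": "critical"},
    {"component": "example-http-client", "version": "4.0.1", "severity": "low"},
]

blocking = gate(findings)
if blocking:
    names = ", ".join(f["component"] for f in blocking)
    print(f"Build blocked by: {names}")
```

The same function could be pointed at components before they reach a developer machine, or at a scheduled scan of what is already in production, with a different threshold for each stage.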

Your organisation should only be using approved components that have already been scanned for malware and vulnerabilities, and having a centralised artifact repository helps with that. Having a centralised artifact repository provides the assurance that you are downloading the component from its official source. This helps address typo-squatting and dependency confusion attacks, which are popular software supply chain attack approaches. It is recommended to use an SCA tool with rules or policies in place to determine when a component is approved for use automatically. Some organisations also use a centralised artifact repository to reduce the number of open-source suppliers. Rather than having 15 different components that provide the same functionality, they would limit the use to only the highest quality component.
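One simple way to picture the typo-squatting protection is a check of every requested component name against the approved list, flagging near-misses. The sketch below uses Python's standard `difflib` module for the comparison; the approved list, the `check_component` function and the 0.8 similarity cutoff are assumptions for illustration, and a real repository manager would rely on far more signals than name similarity.

```python
import difflib

# Hypothetical approved list held by the central repository.
APPROVED = ["cryptography", "numpy", "pandas", "requests"]


def check_component(name, approved=APPROVED, cutoff=0.8):
    """Classify a requested name as approved, a likely typo-squat, or unapproved."""
    if name in approved:
        return ("approved", None)
    # get_close_matches returns the most similar approved names above the cutoff.
    close = difflib.get_close_matches(name, approved, n=1, cutoff=cutoff)
    if close:
        return ("possible-typosquat", close[0])
    return ("unapproved", None)


for requested in ["requests", "reqeusts", "totally-new-lib"]:
    print(requested, "->", check_component(requested))
```

A name that is almost, but not quite, an approved component is treated as more suspicious than a completely unknown one, because that is exactly what a typo-squatting attacker is counting on.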

When using an open-source component, you should always use the latest version. This would mean you have the latest bug fixes and security fixes. You should also be proactive and patch the components in your production application regularly whenever newer versions become available. Patch management is crucial in managing your software supply chain, and it can very quickly get out of control. If you let your components get stale, it can be quite hard to remediate should a security issue be discovered, as there might be many breaking changes in the newer fixed version that will also need to be addressed. Successful approaches that I’ve seen for this include having a policy where teams should update all stale components when making new changes, having dedicated time set aside each month, and automating the upgrades using tools like GitHub’s Dependabot.
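To show what "stale" can look like in practice, here is a minimal sketch that compares the versions an application pins against the newest versions available and reports how many major versions behind each one is. The component names and versions are hypothetical, and the parser assumes plain dotted numeric versions such as 2.3.1; real version schemes are messier.

```python
def parse(version):
    """Turn a dotted numeric version such as '2.3.1' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def stale_components(pinned, latest):
    """Return (name, current, newest, major_versions_behind) for outdated components."""
    report = []
    for name, current in sorted(pinned.items()):
        newest = latest.get(name, current)
        if parse(newest) > parse(current):
            behind = parse(newest)[0] - parse(current)[0]
            report.append((name, current, newest, behind))
    return report


# Hypothetical data: what the application pins versus what is available.
pinned = {"example-web-framework": "2.3.1", "example-orm": "1.9.0", "example-utils": "5.0.0"}
latest = {"example-web-framework": "4.0.0", "example-orm": "1.9.4", "example-utils": "5.0.0"}

report = stale_components(pinned, latest)
for name, current, newest, behind in report:
    print(f"{name}: {current} -> {newest} ({behind} major version(s) behind)")
```

The components that are several major versions behind are the ones most likely to involve breaking changes, so they are the ones worth scheduling first.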

There are times when fixed versions of open-source components are not yet available after a security vulnerability has been disclosed or when a proof of concept or exploit kit is released. There are also times when your development teams require additional time to remediate. Having a Web Application Firewall (WAF) allows you to secure the organisation while the fix is being applied.

Modern applications are mostly made up of open-source components. Unlike the code that your developers write, you have little visibility into the secure development practices of open-source developers. Our dependency on open-source components is going to increase over time, and implementing these five steps will help secure your organisation’s software supply chain.

In today’s interconnected world, where industrial systems and critical infrastructure underpin the backbone of society, operational technology (OT) security has never been more crucial. As industries evolve, particularly within critical sectors such as energy, healthcare, and manufacturing, they increasingly rely on OT systems to control physical processes. However, these systems are also vulnerable to cyber threats, placing essential services and national security at risk. Operational Technology (OT) security architecture provides the blueprint for safeguarding these systems, ensuring that critical infrastructure remains resilient and functional despite sophisticated attacks.

With the introduction of the SOCI Act (Security of Critical Infrastructure Act) in Australia and similar legislative measures globally, there is a growing demand for robust security frameworks that protect against cyber threats. The Australian market, in particular, has seen a heightened focus on OT security as organisations seek to comply with government mandates and protect vital infrastructure. In this blog, we’ll explore OT security architecture, its importance, and the key elements that ensure its effectiveness.

At its core, OT security architecture refers to the structured design and implementation of security measures to protect OT systems. These systems often include industrial control systems (ICS), supervisory control and data acquisition (SCADA) systems, and other technologies for managing critical infrastructure such as power grids, water treatment plants, and transportation networks. Unlike traditional IT systems, which handle data and communications, OT systems directly impact physical processes. Any disruption to these systems can lead to catastrophic consequences, including power outages, water contamination, or worse—mass casualties.

The rise of digital transformation has introduced new challenges. As OT systems become more interconnected with IT systems via the Industrial Internet of Things (IIoT) and other advancements, the attack surface for cybercriminals expands. This integration has made it easier for hackers to infiltrate OT environments, putting vital assets at risk. As a result, a comprehensive security architecture is needed to address the legacy systems and the evolving technological landscape.

One of the fundamental aspects of OT security architecture is network segmentation and zoning. This involves dividing the OT environment into distinct zones based on function and security needs, ensuring that sensitive or critical zones are isolated from less secure areas.

For example, OT systems often include safety-critical processes, such as controlling power generation, and less critical systems, such as office networks. These should be segregated to prevent an attack on a lower-priority system from impacting core operations. A common approach is implementing a demilitarised zone (DMZ) that acts as a buffer between the OT and IT environments. Firewalls, intrusion detection systems (IDS), and other security measures should be deployed to monitor traffic and control access between zones.
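The zoning idea can be sketched as an explicit allowlist of permitted paths between zones, with everything else denied by default. The zone names, the ports and the `is_allowed` function below are illustrative assumptions, not a recommended rule set; in practice these rules live in firewalls and are far more granular.

```python
# Allowed conduits between zones: (source zone, destination zone) -> permitted ports.
# Zone names and ports are hypothetical examples.
ALLOWED_CONDUITS = {
    ("it", "dmz"): {443},
    ("dmz", "ot-supervisory"): {4840},
    ("ot-supervisory", "ot-control"): {502},
}


def is_allowed(src_zone, dst_zone, port):
    """Default deny: traffic passes only if the zone pair and port are listed."""
    return port in ALLOWED_CONDUITS.get((src_zone, dst_zone), set())


print(is_allowed("it", "dmz", 443))         # office network reaching the DMZ
print(is_allowed("it", "ot-control", 502))  # office network reaching controllers directly
```

Note that there is no direct entry from the IT zone to the control zone, so an attacker who compromises an office machine has to cross the DMZ and the supervisory zone, each of which is a point where traffic can be inspected.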

According to a 2023 report by the Australian Cyber Security Centre (ACSC), 74% of attacks on OT systems could have been mitigated or prevented through proper network segmentation. This underscores the importance of maintaining strict controls over how different systems communicate with each other.

Real-time monitoring is essential in identifying threats before they have the chance to disrupt OT operations. Given the critical nature of OT environments, even minor disruptions can lead to significant downtime or damage. By implementing tools such as Security Information and Event Management (SIEM) systems and other monitoring solutions, organisations can detect anomalies and respond to threats in real-time.
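As a very small illustration of anomaly detection, the sketch below flags readings that sit far outside a known-good baseline using a simple z-score. The readings, the threshold of 3 standard deviations and the `find_anomalies` function are assumptions for this example; commercial monitoring tools use much richer models and many more data sources.

```python
import statistics


def find_anomalies(baseline, readings, threshold=3.0):
    """Return (index, value) for readings far from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return []
    return [
        (i, value)
        for i, value in enumerate(readings)
        if abs(value - mean) / stdev > threshold
    ]


# Hypothetical sensor values recorded during normal operation.
baseline = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2]
# New readings arriving from the same sensor.
readings = [50.1, 50.0, 57.4, 49.9]

anomalies = find_anomalies(baseline, readings)
print(anomalies)
```

A flagged reading is only a prompt for investigation; whether it is an attack, a fault or a legitimate process change still needs someone who understands the OT environment.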

In the Australian market, companies increasingly invest in Security Operations Centres (SOCs) that provide round-the-clock surveillance and incident response for OT environments. SOCs are vital in ensuring that security teams can swiftly respond to cyber incidents. Per the SOCI Act’s guidelines, organisations involved in critical infrastructure must maintain incident response capabilities and report any breaches to the government within a designated timeframe.

Moreover, incident response plans tailored to OT systems should be in place. These plans should account for the unique characteristics of OT environments, including the need to minimise disruption to physical processes during an attack. A coordinated response effort involving both IT and OT teams is essential for mitigating the impact of security incidents on critical infrastructure.

In recent years, governments worldwide have introduced regulations to protect critical infrastructure. In Australia, the SOCI Act mandates that organisations managing critical assets implement robust security measures and report any significant security incidents. The Act covers a broad range of sectors, including energy, healthcare, water, and telecommunications, all of which rely on OT systems for their core operations.

Compliance with the SOCI Act involves not only the implementation of technical safeguards but also ongoing risk management practices. Organisations must conduct regular security assessments, patch vulnerabilities, and ensure that security measures are current. Failure to comply with these mandates can result in significant penalties and reputational damage.

The global focus on securing critical infrastructure has led to a sharp increase in investment in OT security. The 2023 Global Critical Infrastructure Security Market Report indicates that the OT security market is projected to grow by 7.5% annually, driven largely by government regulations and the increasing complexity of cyber threats. In Australia, this trend is particularly pronounced, with organisations investing heavily in technology and personnel to meet the requirements of the SOCI Act.

To illustrate the importance of OT security architecture, let’s consider the case of the Australian energy sector. Energy companies rely heavily on OT systems to manage the generation, distribution, and transmission of power across the country. In recent years, several energy companies have faced sophisticated cyberattacks aimed at disrupting these processes. For instance, a 2021 report from ACSC revealed that a ransomware attack on an energy provider could have caused widespread blackouts if it hadn’t been for robust OT security measures.

The company in question had implemented a segmented network that isolated critical systems from less important ones. Additionally, real-time monitoring tools detected the threat early on, allowing security teams to neutralise the attack before any physical processes were impacted. This case underscores the value of investing in comprehensive OT security architecture.

The security architecture of OT forms the basis for ensuring the safety of our critical infrastructure. In today’s environment of increasingly sophisticated cyber threats, it is imperative for industries to implement comprehensive OT security measures to protect their operations and uphold national security. Crucial components such as network segmentation, real-time monitoring, and adherence to legislative frameworks like the SOCI Act are essential for safeguarding OT environments.

Given that Australia and the world at large rely heavily on OT systems to drive industries and deliver vital services, the significance of securing these systems cannot be overstated. For organisations operating in the Australian market, particularly those involved in critical infrastructure, the message is clear: invest in OT security architecture to prevent potential catastrophic disruptions in the future. If you want to learn more about how to protect your organisation’s OT environment and ensure compliance with the SOCI Act, contact our team of experts or subscribe to our newsletter for the latest updates on OT security trends.

In the realm of cybersecurity, threat modelling stands as an essential pillar of effective risk management. As Chief Information Security Officers (CISOs), we are inundated with frameworks, methodologies, and best practices that promise to fortify our organisations against an ever-evolving landscape of threats. Nevertheless, with over two decades of experience in cyber assurance, I am resolute in my belief that it is imperative to question the established norms of threat modelling. It is critical to rigorously assess whether traditional approaches to threat modelling truly align with our strategic objectives or if they have regressed into mere exercises in compliance and checkbox security.

The current threat modelling process focuses on identifying assets, enumerating threats, and mapping them to vulnerabilities. Popular frameworks such as STRIDE, PASTA, or OWASP are commonly used. However, these models often overlook real-life cyber-attacks that keep CISOs up at night, mainly focusing on easily defendable known threats. They assume a static threat landscape, neglecting adversaries’ constant innovation and the evolving cyber warfare landscape.

Traditional threat modelling is typically static and created during early system development or periodic security assessments. This doesn’t align with the dynamic nature of cyber threats. Attackers are adaptive and unpredictable, making static threat models obsolete. CISOs must use real-time threat intelligence to bridge the gap between assessments and real-time defences.

The idea that a threat model can cover all possible threats is a complete fallacy. No threat model can predict every potential threat, no matter how thorough it is. Believing in the completeness of these models can lead to a dangerous sense of complacency. To truly enhance security, we must acknowledge the limitations of threat modelling and be proactive in preparing for the unexpected, moving beyond the rigid frameworks dominating the field.

Conventional threat modelling often focuses too much on technical threats and overlooks other important types of risks. As CISOs, it’s crucial to consider a broader range of threats, including strategic, operational, and geopolitical risks. Traditional models that ignore insider threats, supply chain vulnerabilities, and state-sponsored attacks will be futile. A strong threat model should encompass the broader ecosystem in which our organisations operate.

Threat modelling has become a compliance exercise rather than a genuine effort to understand and mitigate risk. This leads to shallow threat models that provide little value and create a false sense of security. As CISOs, we must view threat modelling as a critical component of our risk management strategy, requiring ongoing attention and refinement rather than treating it as a checkbox exercise to satisfy regulatory requirements.

If traditional threat modelling is flawed, what’s the alternative? The answer lies in embracing a more dynamic approach—one that is continuously updated and informed by real-time threat intelligence.

Dynamic threat modelling recognises that the threat landscape constantly changes and that our defences must evolve. It’s about moving away from static, one-time assessments and towards a more fluid and adaptive process. This requires integrating threat modelling into the organisation’s broader security operations rather than treating it as a separate exercise.

This means leveraging automation and machine learning to continuously monitor and update threat models based on the latest threat intelligence. It also means fostering a culture of continuous improvement, where threat models are regularly reviewed and revised, considering new information.

Dynamic threat modelling is not about creating perfect models—it’s about creating models as resilient and adaptable as the threats they are designed to mitigate.

Beyond becoming more dynamic, threat modelling needs to move beyond the technical weeds and engage with the organisation’s broader strategic objectives. This requires a shift in mindset from focusing on individual vulnerabilities and attack vectors to considering the overall risk landscape in which the organisation operates.

As CISOs, we must align cybersecurity with the business’s strategic goals. This means understanding the organisation’s risk tolerance, most critical assets, and position within the broader market and geopolitical environment. Strategic threat modelling should start with these high-level considerations and work downward rather than vice versa.

For instance, in industries like finance or healthcare, where data is a critical asset, the threat model should focus not just on how data breaches might occur but on the potential impacts of such breaches on the organisation’s reputation, regulatory compliance, and customer trust. Similarly, threat models should consider the risks posed by third-party vendors and geopolitical events that could disrupt operations in industries with significant supply chain dependencies.

This strategic approach requires collaboration across the organisation, bringing together stakeholders from different departments to ensure that all aspects of risk are considered. It also requires the CISO to sit in executive discussions, where cybersecurity is treated as a critical business issue rather than a technical one.

The cybersecurity industry is full of “best practices” enshrined in standards and frameworks, but they can stifle innovation and lead to a one-size-fits-all approach. In threat modelling, this emphasis can result in rigid adherence to established frameworks, even when they’re not the best fit for an organisation.

As CISOs, we need to be willing to challenge these norms, develop custom threat modelling approaches, reject elements of popular frameworks that don’t align with our goals, and foster a culture of continuous learning focused on outcomes.

As CISOs, we are uniquely positioned to drive the transformation of threat modelling within our organisations. It starts with recognising the limitations of traditional approaches and confidently challenging the status quo. We must advocate for a more dynamic, strategic, and business-aligned approach to threat modelling.

To do this effectively, we must build strong relationships with other leaders within the organisation and ensure that cybersecurity is seamlessly integrated into every aspect of the business. We must also invest decisively in the tools and technologies that enable real-time threat intelligence and dynamic threat modelling.

Most importantly, we must foster a culture of resilience and adaptability within our security teams. This means boldly encouraging creativity and unconventional thinking and rewarding those who confidently challenge established norms and push the boundaries of what is possible.

The future of threat modelling depends on our willingness to embrace change and think beyond traditional frameworks. As CISOs, we must push for a dynamic, strategic, and business-aligned approach to threat modelling. It’s not just about improving security posture; it’s about thriving in a world of evolving cyber threats. Threat modelling should be a dynamic, strategic process that evolves to meet organisational needs.

In 2024, Australia experienced a significant transformation in its digital landscape. Technological advancements, particularly in artificial intelligence, cloud computing, and IoT, have revolutionised daily life and work. However, this progress has also led to a surge in cybersecurity threats, underscoring the urgent need for robust application security measures. We have seen a remarkable surge in security incidents in the first half of the year. This pivotal moment demands a proactive approach to safeguarding our digital infrastructure.

The first half of 2024 has seen a disturbing surge in cyberattacks targeting Australian businesses, government agencies, and critical infrastructure. These incidents have ranged from ransomware attacks on healthcare providers, which crippled essential services, to data breaches at major financial institutions, exposing sensitive personal information of millions of Australians.

In March, a significant cybersecurity breach at a prominent Australian financial institution brought attention to the critical vulnerabilities in application security. The attackers exploited an insecure API, leading to unauthorised access to sensitive customer data, including financial records and personal identification details. This breach sent shockwaves through the financial sector, prompting a crucial re-evaluation of the adequacy of current application security measures across various industries.

Furthermore, the continued rise of ransomware attacks has been particularly troubling. In June 2024, a ransomware attack on a major Australian healthcare network disrupted services across multiple hospitals, delaying critical medical procedures and compromising patient care. The attackers exploited a vulnerability in a third-party application for scheduling and communication, highlighting the risks posed by insecure applications within critical systems.

These events serve as a stark reminder that as applications become more integral to our daily lives, the need for rigorous security measures becomes paramount.

In the current landscape, application security stands as a fundamental component of any cybersecurity strategy. It encompasses rigorous measures and best practices aimed at fortifying applications against malicious attacks and guaranteeing their seamless operation without vulnerabilities. This approach entails steadfast adherence to secure coding practices, routine vulnerability assessments, and the uncompromising implementation of robust security protocols across the entire software development lifecycle.

Secure Coding Practices:

Developers, listen up! Secure coding is the bedrock of application security. It’s crucial to equip yourselves with the skills to craft code that stands strong against prevalent attack vectors like SQL injection, cross-site scripting (XSS), and buffer overflows. By adhering to coding standards and guidelines and harnessing automated tools for static code analysis, we can markedly diminish the likelihood of introducing vulnerabilities during the development phase.
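Here is a short, self-contained illustration of the SQL injection point, using Python's built-in `sqlite3` module and an in-memory database. The table, the data and the function names are made up for the example. The first function builds the query by string concatenation, and the second passes the user input as a bound parameter.

```python
import sqlite3

# In-memory database with a tiny, made-up users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "user")],
)


def find_user_unsafe(name):
    # Vulnerable: user input becomes part of the SQL text itself.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterised: the value is bound separately and never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "' OR '1'='1"
print("unsafe:", find_user_unsafe(payload))  # the injected condition matches every row
print("safe:  ", find_user_safe(payload))    # no user has this literal name
```

The only difference between the two functions is how the input reaches the database, which is why static analysis tools are good at spotting this pattern early.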

Regular Vulnerability Assessments and Penetration Testing:

Regular vulnerability assessments and penetration testing are imperative in identifying and mitigating security flaws before attackers can exploit them. These tests must be conducted routinely and following any significant changes to the application or its environment. In 2024, Australian businesses have increasingly acknowledged the critical nature of these practices, incorporating them as a standard part of their security protocols.

Secure Software Development Lifecycle (SDLC):

It is absolutely crucial to integrate security into every phase of the software development lifecycle. This requires including security requirements from the very beginning, conducting thorough threat modelling, and consistently performing rigorous security testing. The adoption of DevSecOps practices, where security is seamlessly integrated into the development process rather than treated as an afterthought, has been a prominent trend in 2024.

Third-Party Risk Management:

The events of 2024 have underscored the critical need for organisations to conduct thorough assessments of third-party vendors’ security posture and enforce stringent controls to mitigate the risks associated with external applications and APIs.

Education and Awareness:

Finally, education and awareness are vital components of application security. In 2024, Australian organisations have increasingly invested in training programs to ensure that developers, IT professionals, and end-users understand the importance of security and are equipped to recognise and respond to potential threats.

Recognising the growing cyber threat landscape, the Australian government has taken proactive steps to bolster national cybersecurity. The revised Australian Cybersecurity Strategy 2024 emphasises the need for robust application security and promotes collaboration between government, industry, and academia to develop and implement good practices.

The strategy includes initiatives such as the establishment of a national application security framework, which provides guidelines for secure application development and encourages the adoption of security standards across all sectors. Additionally, the government has introduced incentives for businesses that prioritise application security, including tax breaks and grants for organisations that invest in secure software development practices.

Industry collaboration has also been a key focus, with organisations across various sectors coming together to share threat intelligence and best practices. The formation of sector-specific cybersecurity task forces, such as those in finance, healthcare, and critical infrastructure, has facilitated the development of tailored application security measures that address the unique challenges faced by different industries.

As Australia undergoes digital transformation, the importance of application security cannot be overstated. The events of 2024 have highlighted vulnerabilities in our digital ecosystem. Prioritising application security can protect sensitive data, maintain public trust, and ensure the resilience of critical systems. This collective commitment is essential for building a secure and resilient digital landscape for all Australians.

I’m certain you’ve heard many concerns from CISOs who are struggling to gain visibility into cloud environments despite their considerable efforts and resources.

Many professionals face this common issue around the six-to-eight-month mark of their organisation’s cloud transition journey. Despite significant time and resource investments, they begin to view the transition to the cloud as a costly misstep, largely due to the range of security challenges they confront.

These challenges often stem from a lack of understanding or misconceptions within the company about the nuances of cloud security.

I am consistently astonished by the widespread nature of these challenges!

Here are some of the common misunderstandings regarding security in the cloud.

Since the transition to the cloud has become popular, senior leaders often claim that “the cloud is much more secure than on-premise infrastructure.” There is some truth to this perception. They are often presented with a slick PowerPoint presentation highlighting the security benefits of the cloud and the significant investments made by cloud providers to secure the environment.

It’s a common misconception that when organisations migrate to the cloud, they can relinquish responsibility for security to the cloud provider, like AWS, Google, or Microsoft. This mistaken belief leads them to think that simply moving their workloads to the cloud is sufficient. However, this oversight represents a critical security mistake because organisations still need to actively manage and maintain security measures in the cloud environment to ensure the protection of their data and infrastructure.

The operational model of cloud computing is based on a shared responsibility framework wherein the cloud provider assumes a significant portion of the responsibility for maintaining the infrastructure, ensuring physical security, and managing the underlying hardware and software. However, it’s important to understand that as a cloud user, you also bear the responsibility for configuring and securing your applications and data within the cloud environment.

This shared responsibility model is analogous to living in a rented property. The property owner is responsible for ensuring that the building is structurally sound and maintaining common areas, but as a tenant, you are responsible for securing your individual living space by locking doors and windows.

In the context of cloud computing, when you launch a server or deploy resources on the cloud, the cloud service provider does not automatically take over the task of securing your specific configuration and applications. Therefore, it’s essential to recognise that you must proactively implement robust security measures to protect your cloud assets.
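To illustrate the customer's side of that responsibility, here is a minimal sketch that reviews a list of resource configurations for two common mistakes: storage that is publicly readable and administrative ports open to the whole internet. The resource format, the names and the `audit` function are hypothetical; each cloud provider exposes this information through its own APIs and native tooling.

```python
# Ports treated as administrative access in this sketch (SSH and RDP).
ADMIN_PORTS = {22, 3389}


def audit(resources):
    """Return (resource name, issue) for configurations the customer must fix."""
    findings = []
    for res in resources:
        if res["type"] == "storage_bucket" and res.get("public_read", False):
            findings.append((res["name"], "bucket allows public read"))
        if (
            res["type"] == "firewall_rule"
            and res.get("source") == "0.0.0.0/0"
            and res.get("port") in ADMIN_PORTS
        ):
            findings.append((res["name"], "admin port open to the internet"))
    return findings


# Hypothetical export of resource configurations.
resources = [
    {"type": "storage_bucket", "name": "customer-exports", "public_read": True},
    {"type": "storage_bucket", "name": "build-artifacts", "public_read": False},
    {"type": "firewall_rule", "name": "allow-ssh-anywhere", "source": "0.0.0.0/0", "port": 22},
    {"type": "firewall_rule", "name": "allow-https", "source": "0.0.0.0/0", "port": 443},
]

findings = audit(resources)
for name, issue in findings:
    print(f"{name}: {issue}")
```

None of these settings are something the cloud provider will correct on your behalf, which is the practical meaning of the shared responsibility model.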

Understanding this shared responsibility model is crucial before embarking on your cloud security program to ensure that you have a clear understanding of your role in maintaining a secure cloud environment.

Teams new to the cloud often hold biased views. Some assume that the cloud is inherently more secure than on-premises, while others believe it to be insecure and implement excessive controls. Making either mistake can lead to complacency and potential breaches, or make the work of cloud teams more difficult. This typically occurs when there’s a lack of investment in training the cybersecurity team in cloud security.

They often struggle to fully harness cloud technology’s potential and grapple with understanding its unique operational dynamics, leading to mounting frustration. It’s crucial to recognise that both cloud-based and on-premises infrastructures entail their own set of inherent risks. The key consideration lies not in the physical location of the infrastructure but rather in how it is effectively managed and safeguarded. Prioritising the upskilling of your cybersecurity team in cloud security before embarking on the migration process is essential, as this proactive approach ensures that your organisation is equipped to address potential security challenges from the outset.

It’s crucial to bear in mind that directly transferring on-premises solutions to the cloud and assuming identical outcomes is a grave error. Cloud environments possess unique attributes and necessitate specific configurations. Just because a solution functions effectively on-premises does not ensure that it will perform similarly in the cloud.

Migrating without essential adaptations can leave you vulnerable to unforeseen risks. Whenever feasible, it’s advisable to utilise native cloud solutions or opt for a cloud-based version of your on-premises tools, rather than expecting seamless universal compatibility.

The cloud is a different paradigm and a completely different approach to operations. It is not a one-time solution; you can’t just set it up and forget about it. Treating it like a project you complete and then hand over is a guaranteed way to invite a data breach.

When it comes to your IT infrastructure, it’s crucial to recognise that the cloud is an independent and vital environment that requires an equivalent level of governance compared to your on-premises setup. Many organisations make the mistake of treating cloud management as a secondary or tangential responsibility while focusing primarily on their on-premises systems. However, this approach underestimates the complexities and unique challenges of managing cloud-based resources.

It’s important to dedicate the necessary attention and resources to effectively govern and manage your cloud infrastructure in order to mitigate risks and ensure seamless operations.

Assuming that the responsibilities for managing your on-premises environment will seamlessly transition to the cloud is a risky assumption to make. Many organisations overlook the critical task of clearly defining who will be responsible for implementing security controls, patching, monitoring, and other essential tasks in the cloud. This lack of clarity can lead to potentially disastrous consequences, leaving the organisation vulnerable to security breaches and operational inefficiencies.

It is important to establish a formally approved organisational chart that comprehensively outlines and assigns responsibilities for cloud security within your organisation. This ensures that all stakeholders understand their roles and accountabilities in safeguarding the organisation’s cloud infrastructure.

Furthermore, if your organisation intends to outsource a significant portion of its cloud-related activities, it is imperative to ensure that your organisational chart accurately reflects this strategic decision. This will help to align internal resources and clarify the division of responsibilities between the organisation and its external cloud service providers.

In the realm of cloud security, it’s crucial to address common misconceptions to ensure a robust and effective security posture. Organisations must understand that cloud security is a shared responsibility, requiring active management and maintenance of security measures within the cloud environment. Additionally, it’s important to recognise that both cloud-based and on-premises infrastructures entail inherent risks.

I did my FAIR analysis fundamentals course a few years ago and here are my thoughts on it.

FAIR stands for Factor Analysis of Information Risk, and is the only international standard quantitative model for information security and operational risk. (https://www.fairinstitute.org/)

My interest in learning more about FAIR came from two observations.

The first was that we had many definitions of what constitutes risk. We referred to “script-kiddies” as risks. Not having a security control was referred to as a risk. SQL injection was a risk. We also said things like “How much risk is there with this risk?”

The other observation was about our approach to quantifying risk. We derived the level of risk from likelihood and impact, and sometimes it was hard to get agreement on those values.

Having completed the course, one of the things I like about FAIR is its definitions, particularly of what a risk is and what it must include. A risk statement should include an asset, a threat and an effect, with the method being optional. An example of a risk is the probability of malicious internal users impacting the availability of our customer booking system via denial of service.

FAIR uses future loss as the unit of measurement rather than a rating of critical, high, medium or low. The value of future loss is expressed as a range with a most likely value, along with the confidence level of that most likely value. As such, it focuses on accuracy rather than precision. I quite like that, as it makes risk easier to understand and compare. Reporting that a risk has a 1-in-2-year probability of happening, with a loss between $20K and $50K but most likely $30K, is a lengthy statement. However, it is more tangible and makes more sense than reporting that the risk is a High Risk.

Now it sounds like I’m all for FAIR, but I have some reservations. The main one is that there isn’t always data available to determine such an empirical result. Risk according to FAIR is calculated by multiplying loss frequency (the number of times a loss event will occur in a year) by loss magnitude (the $ range of loss from productivity, replacement, response, compliance and reputation). It’ll be hard to come up with a loss frequency value when there is no past data to base it on. I’ll be guessing the value, not estimating it. FAIR suggests doing an estimate for a subgroup if there isn’t enough reliable data available, but again I see the same problem. The subgroup for loss frequency is the number of times the threat actors attempt to affect the asset multiplied by the percentage of attempts that are successful. Unless you have that data, that to me is no easier to determine.
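To make the arithmetic concrete, here is a minimal sketch of the calculation described above, using the $20K to $50K example with a most likely value of $30K. The function names, the 10 attempts per year and the 5% success rate are my own illustrative assumptions (chosen so that loss frequency works out to once every two years), and the triangular distribution is a simplification I have picked for the sketch rather than something prescribed by the course.

```python
import random
import statistics


def simulate_annual_loss(attempts_per_year, success_rate,
                         loss_low, loss_likely, loss_high,
                         trials=10_000, seed=0):
    """Return simulated annualised losses (loss frequency x loss magnitude).

    Loss frequency is derived from its subgroup: attempts per year
    multiplied by the fraction of attempts that succeed.
    Loss magnitude is sampled from a triangular distribution, which is
    an assumption made for this sketch.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    loss_frequency = attempts_per_year * success_rate
    losses = []
    for _ in range(trials):
        # random.triangular takes (low, high, mode) in that order
        magnitude = rng.triangular(loss_low, loss_high, loss_likely)
        losses.append(loss_frequency * magnitude)
    return losses


def summarise(losses):
    """Return the 10th percentile, median and 90th percentile."""
    deciles = statistics.quantiles(losses, n=10)
    return deciles[0], statistics.median(losses), deciles[-1]


if __name__ == "__main__":
    # Hypothetical inputs: 10 attempts a year, 5% succeed -> 0.5 events a year
    simulated = simulate_annual_loss(10, 0.05, 20_000, 30_000, 50_000)
    p10, p50, p90 = summarise(simulated)
    print(f"Annualised loss: ${p10:,.0f} to ${p90:,.0f}, median ${p50:,.0f}")
```

The output is a range rather than a single rating, which is the point of the approach. It does nothing to solve the data problem, though: the attempt count and success rate still have to come from somewhere.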

Overall it still feels like a much better way of quantifying risk. I’ll end with a quote from the instructor. “Risk statements should be of probability, not of predictions or what’s possible.” It resonated with me as it is something I too often forget.

Imagine you are a security manager being asked to do a security assessment on new software for your organisation. It will be deployed across all Windows workstations and servers and operate as a boot-start driver in kernel mode, granting it extensive access to the system. The driver has been signed by Microsoft’s Windows Hardware Quality Labs (WHQL), so it is considered robust and trustworthy. However, additional components that the driver will use are not included in the certification process. These components are updates that will be regularly downloaded from the internet. As a security manager, would you have any concerns?

I would, but what if it came from a leading global cybersecurity vendor? Do we have too much assumed and transitive trust in cybersecurity vendors?

The recent CrowdStrike Blue Screen of Death (BSOD) incident has raised significant concerns about the security and reliability of kernel-mode software, even when certified by trusted authorities. On July 19, 2024, a faulty update from CrowdStrike, a widely used cybersecurity provider, caused thousands of Windows machines worldwide to experience BSOD errors, affecting banks, airlines, TV broadcasters, and numerous other enterprises.

This incident highlights a critical issue that security managers must consider when assessing new software, particularly software operating in kernel mode. CrowdStrike’s Falcon sensor, while signed by Microsoft’s Windows Hardware Quality Labs (WHQL) as robust and trustworthy, includes components that are downloaded from the internet and are not part of the WHQL certification process.

The CrowdStrike software operates as a boot-start driver in kernel mode, granting it extensive system access. It relies on externally downloaded updates to maintain quick turnaround times for malware definition updates. While the exact nature of these update files is unclear, they could potentially contain executable code for the driver or merely malware definition files. If these updates include executable code, it means unsigned code of unknown origin is running with full kernel-mode privileges, posing a significant security risk.

The recent BSOD incident suggests that the CrowdStrike driver may lack adequate resilience, with insufficient error checking and parameter validation. This became evident when a faulty update caused widespread system crashes, indicating that the software’s error handling mechanisms could not prevent catastrophic failures.
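As an illustration of what error checking and parameter validation can look like, here is a minimal sketch of a loader that validates a whole content update before using it and keeps the last known-good rules if anything is wrong. The pipe-delimited format, the field names and every function here are hypothetical; this is not how the Falcon sensor or its update files work.

```python
from dataclasses import dataclass

EXPECTED_FIELDS = 3  # hypothetical record layout: name|pattern|severity


class InvalidUpdateError(ValueError):
    """Raised when a content update fails validation."""


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: str
    severity: int


def parse_rule(line: str) -> Rule:
    """Parse one record, rejecting it instead of indexing blindly."""
    fields = [field.strip() for field in line.split("|")]
    if len(fields) != EXPECTED_FIELDS:
        raise InvalidUpdateError(
            f"expected {EXPECTED_FIELDS} fields, got {len(fields)}")
    name, pattern, severity_text = fields
    if not name or not pattern:
        raise InvalidUpdateError("name and pattern must be non-empty")
    try:
        severity = int(severity_text)
    except ValueError as exc:
        raise InvalidUpdateError("severity must be an integer") from exc
    if not 1 <= severity <= 5:
        raise InvalidUpdateError("severity must be between 1 and 5")
    return Rule(name, pattern, severity)


def load_update(text: str, current_rules: list[Rule]) -> list[Rule]:
    """Return the new rules only if every record in the update is valid.

    On any validation failure, or an empty update, the current
    (last known-good) rules are returned unchanged.
    """
    try:
        new_rules = [parse_rule(line)
                     for line in text.splitlines() if line.strip()]
    except InvalidUpdateError:
        return current_rules
    return new_rules or current_rules
```

The design choice worth noting is that a bad update is treated as an expected input rather than an impossible one, so the failure mode is "keep running on yesterday's rules" instead of a crash.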

For security managers, this incident serves as a stark reminder of the potential risks associated with kernel-mode software, even when it comes from reputable sources. It underscores the need for thorough assessments of such software, paying particular attention to:

1. Update mechanisms and their security implications

2. The scope of WHQL certification and what it does and does not cover

3. Error handling and system stability safeguards

4. The potential impact of software failures on critical systems

While CrowdStrike has since addressed the issue and provided fixes, the incident has caused significant disruptions across various sectors. It has also prompted discussions about balancing rapid threat response capabilities and system stability in cybersecurity solutions.

In conclusion, this event emphasises the importance of rigorous security assessments for kernel-mode software, regardless of its certifications or reputation. Security managers must carefully weigh the benefits of such software against the potential risks they introduce to system stability and security.

I am writing this post in a week when we saw the most significant IT outage ever. A content update in the CrowdStrike sensor caused a blue screen of death (BSOD) on Microsoft Windows operating systems. The outage resulted in a large-scale disruption of everything from airline travel and financial institutions to hospitals and online businesses.

At the beginning of the week, I delved into the transformation in software developers’ mindsets over the last few decades. However, as the root cause of this incident came to light, the article transitioned from analysing the perpetual clash between practice domains to advocating for best practices to enhance software quality and security.

Developers were often seen as opposed to security practices during the first decade of the millennium. This was not because they did not want to do the right thing but because of the lack of a collaborative mindset among security practitioners and developers. Even though we have seen a massive shift with the adoption of DevSecOps, there are still gaps in the mature integration of the software development lifecycle, cybersecurity and IT operations.

The CrowdStrike incident offers several valuable lessons for software developers, particularly in strengthening software development cybersecurity programs. Here are some key takeaways:

Security by Design: Security needs to be integrated into every phase of the SDLC, from design to deployment. Developers must embrace secure coding practices, conduct regular code reviews, and use automated quality and security testing tools.

Threat Modelling: Consistently engaging in threat modelling exercises is crucial for uncovering potential vulnerabilities and attack paths, ultimately enabling developers to design more secure systems.

DevSecOps: Incorporating security into the DevOps process to ensure continuous security checks and balances throughout the software development lifecycle.

Cross-Functional Teams: Encouraging collaboration among development, security, and operations teams (DevSecOps) is crucial for enhancing security practices and achieving swift incident response times.

Clear Communication Channels: Establishing clear reporting and communication channels can help ensure a coordinated and efficient response.

Security Training and Awareness: Regular training sessions on the latest security trends, threats, and best practices are vital for staying ahead in today’s ever-changing digital landscape. Developers recognise the need for ongoing education and understand the importance of staying updated on evolving security landscapes.

Balancing Security and Agility: Developers value security measures that are seamlessly integrated into the development cycle. This allows for efficient development without compromising on speed or agility. Implement security processes that strike a balance between robust protection and minimal disruption to the development workflow.

Early Involvement: It is crucial to incorporate security considerations from the outset of the development process to minimise extensive rework and delays in the future.

Preparedness for Security Incidents: Developers should recognise the need for a robust incident response plan to quickly and effectively address security breaches. They should also ensure that their applications and systems can log security events and generate alerts for suspicious activities.

Swift Incident Response: It is important to have a well-defined incident response plan in place. It is crucial for developers to be well-versed in the necessary steps to take when they detect a security breach, including containment, eradication, and recovery procedures.

5. Supply Chain Security and Patch Management

Third-Party Risks and Software Integrity: Developers must diligently vet and update third-party components. To effectively prevent the introduction of malicious code, robust measures must be implemented to verify software integrity and updates. This includes mandating cryptographic signing for all software releases and updates.

Timely and Bug-Free Updates: It is essential to ensure that all software components, including third-party libraries, are promptly updated with the latest security patches. Developers must establish a robust process to track, test, and apply these updates without delay.

Automated Patch Deployment: Automating the patch management process can reduce the risk of human error and ensure that updates are applied consistently across all systems.

Regular Security Audits: Regular security audits and assessments effectively identify and address vulnerabilities before they can be exploited.

Feedback Loops: Integrating feedback loops to analyse past incidents and strengthen security practices can significantly elevate the overall security posture over time.
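To illustrate the software integrity point under Third-Party Risks, here is a minimal sketch that checks a downloaded update against a published SHA-256 digest before it is used. Note that this is a checksum comparison, which I have swapped in as a simpler stand-in for cryptographic signing: it shows that the file matches the digest, not who published it, so the expected digest must itself come from a trusted channel. The function names are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_published_digest(artifact: bytes, expected_sha256: str) -> bool:
    """Return True only if the artifact hashes to the expected digest.

    hmac.compare_digest performs a constant-time comparison, which
    avoids leaking how many leading characters matched.
    """
    expected = expected_sha256.strip().lower()
    return hmac.compare_digest(sha256_hex(artifact), expected)


if __name__ == "__main__":
    update = b"example update payload"  # hypothetical downloaded bytes
    published = sha256_hex(update)      # stands in for the vendor's digest
    print(matches_published_digest(update, published))
    print(matches_published_digest(update + b"tampered", published))
```

A build pipeline would refuse to proceed when the check fails. Full signature verification goes further by tying the artifact to the publisher's key, which is what mandating cryptographic signing is meant to achieve.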

In conclusion, the recent IT outage resulting from the CrowdStrike incident emphasises the critical need for robust cybersecurity in software development. Implementing secure coding practices, fostering collaboration between development, security, and operations teams, and prioritising proactive incident response and patch management can elevate system security. Regular security audits and continuous improvement are imperative to stay ahead in the ever-evolving digital landscape. Looking ahead, the insights drawn from this incident should galvanise a unified effort to integrate security into the software development lifecycle, thereby ensuring the resilience and reliability of digital systems against emerging threats.

Since I started in cybersecurity, I have observed that the field has become dominated by insincere vendors and practitioners driven solely by profit.

As a cybersecurity leader, I have also noticed the increasing commoditisation of cybersecurity over the years, and it’s important for us to address this issue.

Going deeper into the motives, I realised the significance of my blog’s title. Initially, I chose the name to reflect the current state of cybersecurity, where my work felt repetitive and inconsequential, like an assembly line. However, regardless of the organisation, the same recurring non-technical issues persisted.

The primary challenge lies in the way security practitioners interact with the people they are meant to protect within these organisations. Those outside the security team are often victim-shamed and blamed for their perceived ignorance as if they are at fault for not prioritising cybersecurity in their daily work.

Security organisations sometimes oppress the very people they are meant to serve. This behaviour struck me as counterproductive and degrading, particularly as I familiarised myself with nonviolent communication and other conflict-resolution techniques. I wondered whether adopting peacebuilding methods could foster collaboration and alignment among stakeholders. While only a few security practitioners initially showed interest, the transition to DevOps and the shift-left strategy, which emphasise these attributes, attracted like-minded individuals.

Additionally, the way users are treated is like the punitive and shaming approach commonly seen in the criminal justice system. However, this approach has not reduced crime or supported victims. On the other hand, restorative justice, which focuses on repairing the harm caused by crime and restoring the community while respecting the dignity of all involved parties, has shown promise.

Evidence suggests that traditional fear-based and shaming tactics have not effectively promoted user compliance in cybersecurity. Instead, creating a supportive workplace environment has been identified as a more practical approach to encouraging voluntary security behaviours.

In the rapidly evolving landscape of cybersecurity, organisations must adapt their approaches. Rather than relying on fear, uncertainty, and doubt (FUD)-based strategies, we must acknowledge the value of users as allies and reposition cybersecurity as a collaborative effort. This involves a fundamental shift in the industry, prioritising collaboration, understanding, and support to foster a culture of proactive cybersecurity measures. By moving away from fear-based tactics and embracing a more cooperative approach, organisations will be better equipped to mitigate threats and safeguard information.

My recent conference presentation on open-source security revealed a common theme. Audience members didn’t realise how pervasive open-source is. Everyone in the audience knew that their organisation uses a fair number of open-source components, but they thought that it only makes up a small percentage of their applications, at around 30% or less.

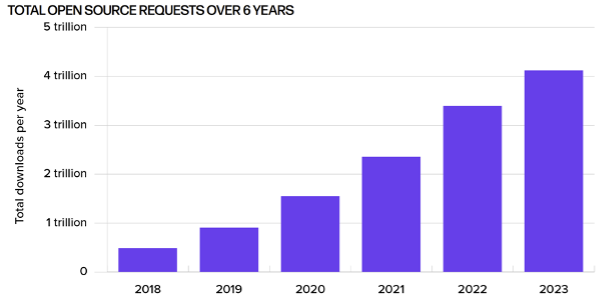

The truth is that open source makes up the bulk of your applications. Industry reports have estimated that 85% of modern applications are built from open-source components. The percentage is higher for modern JavaScript web applications, with 97% of the code coming from open-source components. My analysis has found those numbers to be a low estimate, with the percentage for Java applications at around 98%. What was surprising was that around three quarters of those open-source components were not explicitly incorporated into their applications; they were transitive dependencies. And with organisations embracing generative AI for software development, that 2% of custom code might not even be written by their developers.

Our use of open-source software is growing exponentially, with the number of download requests exceeding 4 trillion last year, almost doubling from two years ago. But a critical caveat exists: not all open-source offerings are created equal. Around 500 billion of last year’s download requests were for components with known risk. That is around 1 in 8 downloads going to components that have one or more identified security vulnerabilities. Log4j is one such component. It had a critical vulnerability that was disclosed in December 2021 and resulted in most organisations enacting their incident response plans. Today, around 35% of download requests for Log4j are for vulnerable versions. That’s roughly 1 in 3 downloads. Why are we still downloading open-source components with known risk, especially components like Log4j? I believe that most organisations are unaware of their open-source consumption, especially for transitive dependencies.

Do you know your organisation’s open-source consumption? Do you have a software bill of materials? If you don’t, then you’re probably using more open source than you realise.
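To show why transitive dependencies are so easy to miss, here is a minimal sketch that walks a dependency graph and separates what you declared from what came along for the ride. The component names and the graph are made up for illustration; in practice the graph would come from your build tool or an SBOM.

```python
from collections import deque

# Hypothetical dependency graph: component -> components it depends on
EXAMPLE_GRAPH = {
    "web-framework": ["logging-lib", "http-core"],
    "http-core": ["logging-lib", "tls-lib"],
    "json-lib": [],
}


def classify_dependencies(graph, direct):
    """Return (direct, transitive) component names, each sorted.

    A breadth-first walk from the declared dependencies finds every
    component that ends up in the application. The `seen` set also
    protects against cycles in the graph.
    """
    declared = set(direct)
    seen = set(declared)
    queue = deque(declared)
    while queue:
        component = queue.popleft()
        for child in graph.get(component, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(declared), sorted(seen - declared)


if __name__ == "__main__":
    declared, pulled_in = classify_dependencies(
        EXAMPLE_GRAPH, ["web-framework", "json-lib"])
    total = len(declared) + len(pulled_in)
    print(f"Declared: {declared}")
    print(f"Transitive: {pulled_in}")
    print(f"{len(pulled_in)} of {total} components were never chosen explicitly")
```

Even in this toy graph, the logging library arrives through two different paths without ever being declared, which is exactly how a vulnerable component can sit in an application unnoticed.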

By taking proactive steps to illuminate and manage open-source usage, organisations can harness the power of open source while mitigating associated security risks.